High Availability (HA)

VMware's high availability has a simple goal: if an ESXi server crashes, all the VMs running on that server go down with it. The other ESXi servers within the cluster detect this and bring the VMs back up on the remaining ESXi servers. Once the failed ESXi server has been repaired and is back online, the cluster will be rebalanced if DRS is enabled and set to automatic. The new features regarding HA in version 4.1 are

VMware's HA is actually Legato's Automated Availability Manager (AAM) software, which has been re-engineered to work with VMs. VMware's vCenter agent interfaces with the VMware HA agent, which acts as an intermediary to the AAM software. vCenter is required to configure HA but is not required for HA to function (DRS and DPM do require vCenter). The ESXi servers constantly check each other for availability; this is done via the Service Console vSwitch. HA and DRS work together to make sure that crashed VMs are brought back online quickly and to keep vMotion events to a minimum. HA does require shared storage for your VMs, as each ESXi server has to have access to the VMs' files. You must also make sure that DNS is configured for both forward and reverse lookups.
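As a quick way to confirm the DNS requirement before enabling HA, here is a minimal Python sketch that does a forward lookup followed by a reverse lookup for a host name. It is only an illustration and not part of any VMware tooling; the function name `check_dns` and the example host name are my own assumptions, and the resolver functions are passed in so the logic can be tried without a real DNS server.

```python
import socket


def check_dns(hostname, forward=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Return (ok, detail) for a forward plus reverse lookup round trip.

    check_dns is a hypothetical helper; forward/reverse default to the
    standard library resolvers but can be swapped for testing.
    """
    try:
        ip = forward(hostname)  # forward lookup: name -> IP
    except OSError as exc:
        return False, f"forward lookup failed for {hostname}: {exc}"
    try:
        name, aliases, _ = reverse(ip)  # reverse lookup: IP -> name
    except OSError as exc:
        return False, f"reverse lookup failed for {ip}: {exc}"

    # Compare on the short host name so "esxi1" and "esxi1.example.local" match.
    wanted = hostname.lower().split(".")[0]
    found = {n.lower().split(".")[0] for n in [name, *aliases]}
    if wanted in found:
        return True, f"{hostname} <-> {ip}"
    return False, f"{ip} resolves back to {name}, not {hostname}"


# Example (assumed host name, needs working DNS):
# print(check_dns("esxi1.example.local"))
```

Run it once for each ESXi server name in the cluster; any result that comes back `False` should be fixed in DNS before HA is turned on.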

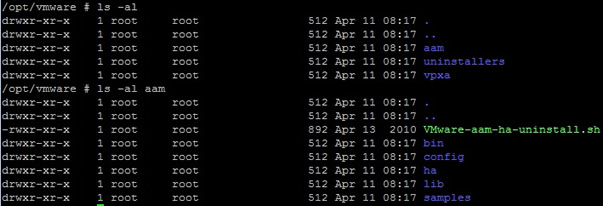

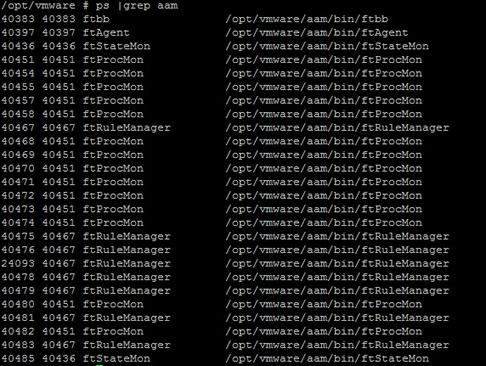

The screenshot below displays the HA directory; notice the aam directory.

Normally a cluster has a redundancy of +1 or more. For example, if you need five ESXi servers to support your environment, you should have six ESXi servers within the cluster. The additional ESXi server provides cover during a server crash or when you need to update or repair a server, so there is no degradation of your services.
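The +1 sizing rule above can be written down as a small calculation. This is a rough Python sketch under simplified assumptions: every host has the same capacity, and the load is expressed in the same (arbitrary) unit as the capacity. The function name and the figures are illustrative only.

```python
import math


def hosts_required(total_load, capacity_per_host, spare_hosts=1):
    """Hosts needed to carry total_load, plus spare_hosts for redundancy.

    Hypothetical helper: assumes identical hosts and a single load figure.
    """
    if capacity_per_host <= 0:
        raise ValueError("capacity_per_host must be positive")
    # Hosts needed just to run the workload, rounded up to whole servers.
    working_hosts = math.ceil(total_load / capacity_per_host)
    # Add the redundancy margin (the "+1" in N+1).
    return working_hosts + spare_hosts


# A workload that fills five hosts needs six hosts with +1 redundancy.
print(hosts_required(total_load=400, capacity_per_host=80))  # prints 6
```

Raising `spare_hosts` to 2 models a cluster that can lose one server while another is down for maintenance.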

If you have previous experience of clusters you will have heard of split-brain. This means that one or more ESXi servers become orphaned from the cluster due to network issues; such a server is also known as the isolated host. The problem with the split is that each part thinks it is the real cluster. VMware's default behavior is that the isolated host powers off all its VMs, which releases the locks on the VMs' files so that other ESXi servers can use them. So how does an ESXi server know that it is isolated? You can configure a default gateway, so that if the server cannot reach this gateway it knows there is a problem; you can also use an alternative IP device as a ping source. Try to make sure that you have redundancy built into your Service Console network (multiple NICs or even a second Service Console).
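To make the isolation check a little more concrete, here is a small Python sketch of the idea: a host only treats itself as isolated when it hears nothing from the other servers and also cannot reach any of its ping targets (the default gateway or an alternative IP device). This is my own simplification for illustration, not VMware's actual implementation; the function name, the addresses and the `ping` callable are all assumptions.

```python
def is_isolated(peer_heartbeat_seen, isolation_addresses, ping):
    """Decide whether this host should consider itself isolated.

    peer_heartbeat_seen: True if any other cluster member was heard recently.
    isolation_addresses: IPs to test, e.g. the default gateway.
    ping: callable taking an address and returning True if it answered
          (hypothetical; plug in whatever reachability test you use).
    """
    if peer_heartbeat_seen:
        # Still in contact with the cluster, so not isolated.
        return False
    # No peers heard: isolated only if every ping target is unreachable too.
    return not any(ping(addr) for addr in isolation_addresses)


# Example with a fake ping where only the gateway answers.
reachable = {"192.168.1.1"}
print(is_isolated(False, ["192.168.1.1", "192.168.1.250"], lambda a: a in reachable))
```

Having more than one ping target reduces the chance that a single failed device (for example a rebooting gateway) makes a healthy host think it is isolated.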

With version 4 you must have at least one management port enabled for HA, so that the HA agent can start without producing error messages. Try to set up this port on the most reliable network.

To configure a management port, follow the steps below

| Management port | In vCenter select the ESXi server, then click the configuration tab and select networking from the hardware panel. Select the properties of vSwitch0 (or whichever one you prefer). Then select add, choose the VMkernel radio button, type a friendly name and make sure that you select "use this port group for management traffic".

The next screen will ask you for an IP address. In the end you should have a management port group for each ESXi server; here are examples of both my ESXi servers

If you are still unsure how to set this up, look at my network section for more information on port groups. |

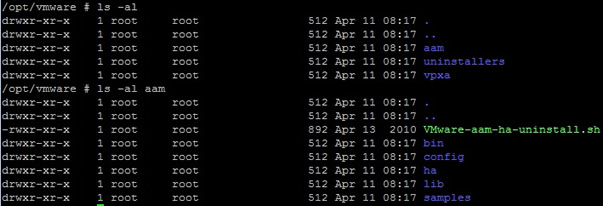

Now that you have a management port group set up you are ready to configure HA. You should have a cluster already set up (if not, see my DRS section). Select the cluster, then the DRS tab, then select the edit tag. When you select "Turn on VMware HA" you should see several more options appear in the left panel; we will discuss these later.

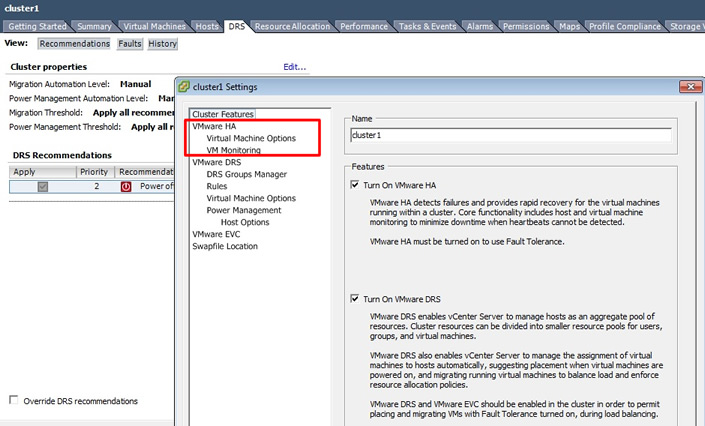

When you click on OK, you can watch the progress in the "recent tasks" panel at the bottom

A lot of additional processes were started on both ESXi servers; notice the /opt/vmware/aam directory

There are three option panels that you can configure HA with

| HA options | The "enable host monitoring" option does not stop the HA agents; it stops the network monitoring between the ESXi servers. If you need to perform maintenance on the network or default gateway, you may need to untick this option while you carry out your repairs. You can also control the number of ESXi server failures you tolerate before the HA cluster stops powering on VMs on the remaining servers; you have the choices

|

| HA virtual machine options | You can set different startup priorities for VMs and also configure the isolation response should an ESXi server suffer from the split-brain phenomenon. Use the drop-down lists to make your choices

|

| HA VM monitoring | You can automatically restart a VM if the VMware Tools heartbeat is not received within a set time period. Remember, though, that it will only restart the VM; what happens with the applications within the VM is down to you.

|
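The VM monitoring option boils down to a heartbeat timeout. Below is a minimal Python sketch of that idea: each VM reports a heartbeat, and any VM whose last heartbeat is older than the timeout is flagged for a restart. The class name, the VM names and the 30 second timeout are made up for the example; this is not how the HA agent is actually coded.

```python
import time


class HeartbeatMonitor:
    """Track the last heartbeat per VM and report the ones that are overdue.

    Hypothetical illustration of a heartbeat timeout; clock is injectable
    so the behavior can be tested without waiting.
    """

    def __init__(self, timeout_s, clock=time.monotonic):
        self.timeout_s = timeout_s
        self._clock = clock
        self._last_seen = {}

    def beat(self, vm_name):
        # Record that a heartbeat arrived from this VM just now.
        self._last_seen[vm_name] = self._clock()

    def overdue(self):
        # VMs whose last heartbeat is older than the timeout, sorted by name.
        now = self._clock()
        return sorted(
            vm for vm, seen in self._last_seen.items()
            if now - seen > self.timeout_s
        )


# Example with a fake clock so no real waiting is needed.
fake_now = [0.0]
monitor = HeartbeatMonitor(timeout_s=30, clock=lambda: fake_now[0])
monitor.beat("vm-a")
monitor.beat("vm-b")
fake_now[0] = 20.0
monitor.beat("vm-b")       # vm-b keeps reporting, vm-a has gone quiet
fake_now[0] = 40.0
print(monitor.overdue())   # prints ['vm-a']
```

As with the real feature, a check like this only tells you that the VM has stopped responding; it says nothing about whether the applications inside come back cleanly after the restart.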

The methods to test a cluster are

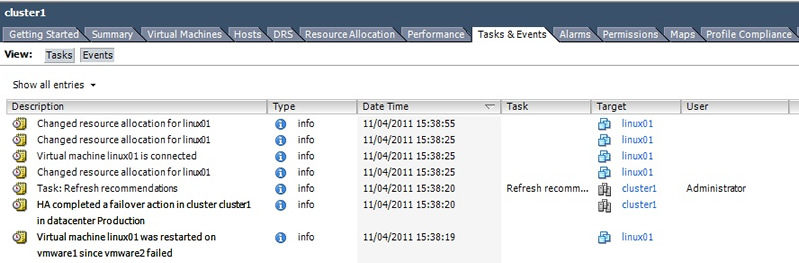

I disconnected the network from vmware2 and waited a little while. Eventually the cluster picked up that vmware2 was no longer available and migrated the only running VM to the other ESXi server; you can check the progress in the "Tasks and Events" tab

I will probably come back to this section when I get more experience with HA, perhaps detailing some errors and problems. I will also cover the various advanced HA settings that you can manually enter.