Introduction

In the old days, data centers were full of physical servers that sat idle or ran at only about 10% of their capacity. This wasted both money (power, cooling, support contracts, etc.) and space. Companies are always looking to reduce their overall costs, and that is where virtualization comes in.

Virtualization has been around since the 1970s, but it was not until the late 1990s that it became a hot topic once again. In 1999 a company called VMware released VMware Workstation, which was designed to run multiple operating systems at the same time on a desktop PC. In 2001 VMware released two server versions: VMware GSX Server, which requires a host O/S to run and was later renamed VMware Server, and VMware ESX Server, which has its own VMkernel (known as the hypervisor) and runs directly on the hardware. ESX Server also introduced a new filesystem, the VMware Virtual Machine File System (VMFS). Since the first release we have had ESX Server 2.0 and ESXi 3.5 (2007), which brings us to the latest version, VMware ESXi Server 4.1 (2010).

VMware ESXi server has seen many changes over the years, and the latest version adds a number of new features, which I cover in more depth below.

Going back to my first paragraph, virtualizing many servers reduces costs, administration and space, and as most companies now try to project a green image, virtualizing whole environments (including production environments) seems to be the way things are going.

So what is virtualization? First, it is not emulation or simulation:

| Term | Description |
|---|---|
| Emulation | Emulation is the process of getting a system to work in an environment it was never designed for. For example, you can download Atari emulation software and play old Atari games on a modern PC. In the background the emulator translates every operation, which causes major performance overhead. Why emulate? Because it is cheaper than rewriting the entire code. |
| Simulation | Simulation gives you the appearance of a system, as opposed to the system itself. For example, NetApp has a NetApp simulator that looks and works like actual NetApp hardware, but it is not. Another example is a flight simulator: it gives the appearance of a real plane, but obviously it is not one. |
| Virtualization | Virtualization allows you to create virtual environments (Linux, Windows) and make each one appear as if it were the only environment using the physical hardware. A virtual machine has a BIOS (Phoenix BIOS), NICs, a storage controller, etc., and has no idea that it is not the only one using the physical hardware. The ESXi server intercepts the virtual machine's privileged operations and virtual interrupts and redirects them to the physical hardware inside the ESXi host. One technique ESXi uses for this is binary translation, which rewrites the guest kernel's privileged instructions on the fly; newer CPUs can handle this in hardware instead. |
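To make the virtualization row more concrete, here is a toy Python model of the trap-and-emulate idea: ordinary guest instructions run directly, while privileged ones trap to the hypervisor, which applies them to that VM's own virtual hardware rather than the real device. The instruction names and the `Hypervisor`/`VirtualMachine` classes are hypothetical; this is an illustration of the concept only, not how the VMkernel is implemented, and it does not model binary translation or hardware assist.

```python
from dataclasses import dataclass, field

# Hypothetical instruction set: only these operations touch "hardware".
PRIVILEGED = {"out", "cli"}


@dataclass
class VirtualMachine:
    name: str
    registers: dict = field(default_factory=lambda: {"acc": 0})
    # Virtual device state owned by this VM only (e.g. a virtual serial port).
    virtual_ports: dict = field(default_factory=dict)
    interrupts_enabled: bool = True


class Hypervisor:
    def __init__(self):
        self.traps = 0

    def handle_trap(self, vm, op, arg):
        """Emulate a privileged operation against the VM's virtual hardware."""
        self.traps += 1
        if op == "out":
            port, value = arg
            vm.virtual_ports[port] = value
        elif op == "cli":
            # The guest believes it disabled interrupts on the real CPU;
            # only its own virtual interrupt flag changes.
            vm.interrupts_enabled = False

    def run(self, vm, program):
        for op, arg in program:
            if op in PRIVILEGED:
                self.handle_trap(vm, op, arg)
            elif op == "add":
                # Unprivileged work runs "directly", without the hypervisor.
                vm.registers["acc"] += arg
            else:
                raise ValueError(f"unknown instruction {op!r}")


hv = Hypervisor()
vm1, vm2 = VirtualMachine("linux"), VirtualMachine("windows")
hv.run(vm1, [("add", 5), ("out", (0x3F8, 65)), ("cli", None)])
hv.run(vm2, [("add", 2), ("out", (0x3F8, 66))])
print(vm1.virtual_ports, vm2.virtual_ports, hv.traps)  # {1016: 65} {1016: 66} 3
```

Both guests write to what they believe is the same port (0x3F8), yet each write lands in its own virtual device state, which is exactly why a virtual machine "has no idea" it is sharing the physical hardware.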

The latest CPUs from Intel and AMD assist virtualization with their own technologies (Intel VT-x and AMD-V); see the appropriate web site for more information.

VMware decided to remove the service console (COS) from its latest kernel (hypervisor). This removes the hypervisor's dependency on a general-purpose operating system, which improves reliability and security and reduces the need for updates (patches). The result is a much more streamlined O/S (approximately 90MB), small enough to be embedded on a host's flash drive, which eliminates the need for a local disk drive (a greener environment).

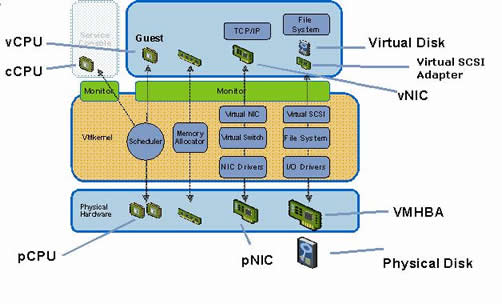

The heart of ESXi is the VMkernel, which controls access to the physical hardware. It is similar to other operating systems in that processes are created and file systems are used, but the VMkernel is designed specifically for running virtual machines: it focuses on resource scheduling, device drivers and I/O stacks. You can communicate with the VMkernel through the vSphere API, which is what the vSphere Client and vCenter use.

VMware ESXi server starts a number of processes. I managed to obtain information on most of them, but there are a few that I still need to investigate further.

| Process | Description |
|---|---|
| vmkeventd | A utility for capturing VMkernel events |
| vmklogger | A utility for logging VMkernel events |
| vpxa | Responsible for vCenter Server communications; commands it receives are passed to the hostd process for processing. |
| sfcbd | The Common Information Model (CIM) system, which monitors hardware and health status. CIM is a standard set of APIs that remote applications can use to query the health and status of the ESXi host. Associated process: sfcb-vmware_int |
| vmware-usbarbitrator | VMware USB Arbitration Service; allows USB devices plugged into the host to be used by a guest. |
| vobd | |
| vprobed | Runs the VProbes daemon. VProbes is a facility for transparently instrumenting a powered-on guest operating system, its currently running processes, and VMware's virtualization software. VProbes provides instrumentation both dynamically and statically. |
| openwsmand | Openwsman is a system management platform that implements the Web Services Management protocol (WS-Management). It is installed and running by default. |
| hostd | The ESXi host agent, which gives the vSphere Client and vCenter access to the host. It consists of two processes, one of which is hostd-poll. |
| vix-high-p | The VIX API (Virtual Infrastructure eXtension) provides guest management operations inside a virtual machine that may be running on VMware vSphere, Fusion, Workstation or Player. These operations are executed by the vmware-tools service, which must be running inside the virtual machine, and guest credentials are required before execution. |
| vix-poll | |
| dropbearmulti | Dropbear combines an SSH client, server, key generator and scp into a single binary called dropbearmulti; this provides ESXi's SSH functionality. |
| net-cdp | CDP (Cisco Discovery Protocol) is used to share information about directly connected Cisco networking equipment, such as upstream physical switches. CDP allows ESX and ESXi administrators to determine which Cisco switch port is connected to a given vSwitch. When CDP is enabled for a particular vSwitch, properties of the Cisco switch, such as device ID, software version and timeout, can be viewed from the vSphere Client. This information is useful when troubleshooting network connectivity issues related to VLAN tagging methods on virtual and physical port settings. |
| net-lbt | A debugging utility for the new Load-Based Teaming feature |
| net-dvs | A debugging utility for the Distributed vSwitch |
| busybox (ash) | BusyBox provides many standard Unix tools, much like the larger (but more capable) GNU Core Utilities. It is designed as a single small executable for use with the Linux kernel, which makes it ideal for embedded devices, and is self-dubbed "The Swiss Army Knife of Embedded Linux". It also provides the ash shell. |
| helper??-? | |
| dcui | The Direct Console User Interface (DCUI) process provides a local management console for the ESXi host. |
| **VMware iSCSI processes** | |
| vmkiscsid | VMware Open-iSCSI initiator daemon; its configuration file is /etc/vmware/vmkiscsid/iscsid.conf |
| iscsi_trans_vmklink | |
| iscsivmk-log | |
| **VMware vMotion** | |
| vmotionServer | |
| **VMware High Availability** | |
| agent | Installed and started when an ESXi server is joined to an HA cluster. |

There are a number of ports used by VMware ESXi; here is a list of some of the common ones:

| Port | Use |
|---|---|
| 22 | SSH access |
| 53 | DNS |
| 80 | Provides access to a static welcome page; all other traffic is redirected to port 443 |
| 443 | Acts as a reverse proxy to a number of services, providing Secure Sockets Layer (SSL) access. The vSphere API uses this port for communications. |
| 902 | Remote console communication between the vSphere Client and the ESXi host for authentication, migration and provisioning |
| 903 | Used by the VM console |
| 5989 | Communication with the CIM broker to obtain hardware health data for the ESXi host |
| 8000 | vMotion requests |
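When troubleshooting connectivity, it can help to check which of these ports actually accept connections. Below is a minimal Python sketch that does a plain TCP connect to each port in the table. It is my own helper, not a VMware tool; it only tests TCP, and some entries (DNS on 53, for example) are outbound connections made by the host, so a "closed" result there is expected.

```python
import socket

# Ports from the table above; descriptions shortened.
ESXI_PORTS = {
    22: "SSH",
    53: "DNS",
    80: "Static welcome page; other traffic redirected to 443",
    443: "Reverse proxy for SSL services, including the vSphere API",
    902: "Remote console, authentication, migration and provisioning",
    903: "VM console",
    5989: "CIM broker (hardware health data)",
    8000: "vMotion requests",
}


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable or name lookup failed
        return False


def check_host(host, ports=ESXI_PORTS, timeout=2.0):
    """Map each port to (description, reachable) for the given host."""
    return {p: (desc, is_port_open(host, p, timeout)) for p, desc in ports.items()}
```

For example, `check_host("esxi01.example.com")` (a placeholder hostname) returns a dictionary such as `{22: ("SSH", True), ...}`, which you can print as a simple open/closed report.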

ESXi can be deployed in two formats:

| Format | Description |
|---|---|
| Embedded | Your ESXi server comes preloaded on a flash drive; you simply power on the server and boot from the flash drive. You configure the server from the DCUI, after which you can manage it via the vSphere Client or vCenter. |
| Installable | This requires a local host disk or, new in version 4.1, booting from a SAN, so you can have a diskless server. You start the installation either from CD or via PXE boot. You can also run scripted installations, which means you can configure the server in advance. Again, the vSphere Client or vCenter can be used to manage the ESXi server. |

ESXi now comes with two powerful command-line utilities, vCLI and PowerCLI, as well as the new Tech Support Mode (TSM), which allows low-level access to the VMkernel so that you can run diagnostic commands.

| Utility | Description |
|---|---|
| vCLI | A replacement for the esxcfg commands found in the service console, available for both Linux and Windows. |
| PowerCLI | Extends Windows PowerShell to allow management of vCenter Server objects. PowerShell is designed to replace the DOS command prompt; it is a powerful scripting tool that can run complex tasks across many ESXi hosts or virtual machines. |

ESXi Server has the features described below; although ESXi itself is free, some features require a license.

The VMware vNetwork Distributed Switch (dvSwitch) provides centralized configuration of networking for hosts within your vCenter Server data center. This means that you can make changes once in vCenter and have them applied to a number of ESXi hosts or virtual machines. Network I/O Control is a new traffic management feature for dvSwitches: it implements a software scheduler within the dvSwitch to isolate and prioritize traffic types on the links that connect your ESXi server to the physical network. It recognizes the following types of traffic: virtual machine, management, iSCSI, NFS, Fault Tolerance and vMotion.

Network I/O Control uses shares and limits to control traffic leaving the dvSwitch; both can be configured on the Resource Allocation tab. Limits are applied before shares, and a limit applies across an entire team of NICs. Shares, on the other hand, schedule and prioritize traffic on each individual physical NIC in a team.
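To see how limits and shares interact, here is a simplified Python model of one congested uplink. It caps each traffic type at its limit first, then divides the NIC's bandwidth in proportion to shares, re-dividing any slice a type does not need (a standard "water-filling" allocation). This is my own approximation, not VMware's scheduler: it assumes all of a traffic type's load sits on this single uplink, so the team-wide limit can be applied directly, and the share and limit values are made up for illustration.

```python
def apply_limits(demands, limits):
    """Cap each traffic type's demand at its limit (no entry means unlimited)."""
    return {t: min(d, limits.get(t, float("inf"))) for t, d in demands.items()}


def share_allocation(capacity, demands, shares):
    """Split one NIC's capacity among traffic types in proportion to shares.

    Types needing less than their proportional slice get exactly what they
    need; the unused bandwidth is re-divided among the rest (water-filling).
    """
    alloc = {t: 0.0 for t in demands}
    active = {t for t, d in demands.items() if d > 0}  # idle types get nothing
    remaining = float(capacity)
    while active and remaining > 1e-9:
        total = sum(shares[t] for t in active)
        fair = {t: remaining * shares[t] / total for t in active}
        satisfied = {t for t in active if demands[t] - alloc[t] <= fair[t]}
        if not satisfied:
            # Everyone wants more than their slice: hand out slices and stop.
            for t in active:
                alloc[t] += fair[t]
            break
        for t in satisfied:
            remaining -= demands[t] - alloc[t]
            alloc[t] = float(demands[t])
        active -= satisfied
    return alloc


def nioc_allocate(capacity, demands, shares, limits):
    """Limits first, then shares, as Network I/O Control applies them."""
    return share_allocation(capacity, apply_limits(demands, limits), shares)


alloc = nioc_allocate(
    capacity=10_000,  # a 10GbE uplink, in Mbps
    demands={"virtual machine": 8_000, "vMotion": 6_000, "NFS": 1_000},
    shares={"virtual machine": 100, "vMotion": 50, "NFS": 50},
    limits={"vMotion": 3_000},
)
print(alloc)  # {'virtual machine': 6000.0, 'vMotion': 3000.0, 'NFS': 1000.0}
```

Note that shares only matter when the link is contended: if the combined demand fits within the NIC's capacity, every traffic type simply gets what it asks for (up to its limit).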

VMware also provides Load-Based Teaming (LBT), which is used to avoid network congestion on an ESXi server. It dynamically adjusts the mapping of virtual ports to physical NICs to balance the network load leaving and entering the dvSwitch: when a link becomes congested, LBT attempts to move one or more virtual ports to a less utilized link within the dvSwitch.
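The rebalancing idea can be sketched in a few lines of Python. This is a simplified model, not VMware's implementation: the 75% trigger is the figure commonly quoted for LBT and is treated here as an assumption, the 30-second averaging window LBT uses is not modelled, and the NIC and VM names are hypothetical.

```python
from dataclasses import dataclass

THRESHOLD = 0.75  # assumed trigger: fraction of uplink capacity in use


@dataclass
class VirtualPort:
    name: str
    load_mbps: float
    uplink: str


def utilization(uplinks, ports):
    """Fraction of each uplink's capacity used by the ports mapped to it."""
    used = {u: 0.0 for u in uplinks}
    for p in ports:
        used[p.uplink] += p.load_mbps
    return {u: used[u] / cap for u, cap in uplinks.items()}


def rebalance(uplinks, ports, threshold=THRESHOLD):
    """Move virtual ports off congested uplinks; return the moves made."""
    moves = []
    if len(uplinks) < 2:
        return moves  # nowhere to move anything
    for src in uplinks:
        # Try the busiest ports first so fewer moves are needed.
        on_src = sorted((p for p in ports if p.uplink == src),
                        key=lambda p: p.load_mbps, reverse=True)
        for port in on_src:
            util = utilization(uplinks, ports)
            if util[src] <= threshold:
                break  # this uplink is no longer congested
            dst = min((u for u in uplinks if u != src), key=util.get)
            # Only move if the target stays under the threshold afterwards.
            if util[dst] + port.load_mbps / uplinks[dst] <= threshold:
                port.uplink = dst
                moves.append((port.name, src, dst))
    return moves


uplinks = {"vmnic0": 1000, "vmnic1": 1000}  # capacities in Mbps
ports = [
    VirtualPort("vm1", 500, "vmnic0"),
    VirtualPort("vm2", 300, "vmnic0"),
    VirtualPort("vm3", 100, "vmnic0"),
    VirtualPort("vm4", 200, "vmnic1"),
]
print(rebalance(uplinks, ports))    # [('vm1', 'vmnic0', 'vmnic1')]
print(utilization(uplinks, ports))  # {'vmnic0': 0.4, 'vmnic1': 0.7}
```

Here vmnic0 starts at 90% utilization, so the busiest virtual port (vm1) is moved to vmnic1, leaving both links under the threshold.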

The vStorage API for Array Integration (VAAI) is a new API that storage partners can use to offload specific storage functions to the array in order to improve performance. It supports the following primitives:

| Primitive | Advanced configuration setting | Description |
|---|---|---|
| Full Copy | DataMover.HardwareAcceleratedMove | Enables the array to make full copies of data within the array without requiring the ESXi host to read or write the data. Full copy can also reduce the time required for a Storage vMotion operation, because on VAAI-capable hardware the virtual disk data is copied by the array and does not need to pass to and from the ESXi hosts. |
| Block Zeroing | DataMover.HardwareAcceleratedInit | The storage array handles zeroing out blocks during the provisioning of virtual machines. Block zeroing also speeds up the allocation of new virtual disks, because the array can report to the ESXi server that the process is complete almost immediately while it actually completes in the background. Without VAAI, the ESXi server has to wait until zeroing finishes, which can take a while for some virtual machines. |
| Hardware-assisted locking | VMFS3.HardwareAcceleratedLocking | Provides an alternative to Small Computer System Interface (SCSI) reservations as a means of protecting VMFS metadata. It is more granular than SCSI reservations: it uses the storage array's atomic test-and-set capability to provide a fine-grained, block-level locking mechanism. Any VMFS operation that allocates space, such as starting or creating a virtual machine, has in the past required a SCSI reservation to ensure the integrity of VMFS metadata on datastores shared by many ESXi hosts. |
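The difference between a SCSI reservation and hardware-assisted locking is easiest to see side by side. The toy Python model below (class and file names are hypothetical) contrasts locking the whole LUN with an atomic test-and-set on a single lock record; a `threading.Lock` stands in for the atomicity guarantee the array provides. It illustrates the locking granularity only and does not reflect how VMFS actually lays out its metadata.

```python
import threading


class Lun:
    """Toy shared LUN holding one on-disk lock record per file."""

    def __init__(self, files):
        self._lock_records = {f: None for f in files}  # None means unlocked
        self._reserved_by = None
        self._media = threading.Lock()  # stands in for the array's atomicity

    # SCSI reservation style: one host locks the whole LUN.
    def reserve(self, host):
        with self._media:
            if self._reserved_by not in (None, host):
                return False  # reservation conflict: another host holds the LUN
            self._reserved_by = host
            return True

    def release(self, host):
        with self._media:
            if self._reserved_by == host:
                self._reserved_by = None

    # Hardware-assisted locking style: atomic compare-and-write on one record.
    def atomic_test_and_set(self, record, expected, new):
        with self._media:
            if self._lock_records[record] != expected:
                return False  # someone else changed the record first
            self._lock_records[record] = new
            return True


lun = Lun(["vm1.vmdk", "vm2.vmdk"])

# Reservation: esxB is blocked even though it wants a different file.
print(lun.reserve("esxA"), lun.reserve("esxB"))  # True False
lun.release("esxA")

# Test-and-set: each host locks only the record it needs.
print(lun.atomic_test_and_set("vm1.vmdk", None, "esxA"))  # True
print(lun.atomic_test_and_set("vm2.vmdk", None, "esxB"))  # True
print(lun.atomic_test_and_set("vm1.vmdk", None, "esxB"))  # False: already held
```

With reservations, unrelated metadata updates from different hosts serialize on the whole LUN; with test-and-set, they only conflict when two hosts race for the same lock record.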

Host profiles are used to standardize and simplify how you manage your vSphere host configurations. You capture a policy containing the networking, storage, security and other settings of a properly configured host, and then apply that policy to other hosts to maintain consistency.
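Conceptually, a host profile is "capture from a reference host, then check and apply elsewhere". The Python sketch below models that workflow with plain dictionaries. The setting names (`ntp.server`, `vswitch0.mtu`, and so on) and hostnames are hypothetical, and this is not the vCenter Host Profiles API; it only shows the capture/compliance/apply idea.

```python
def capture_profile(reference_config, keys):
    """Capture a profile: the subset of a reference host's settings to enforce."""
    return {k: reference_config[k] for k in keys}


def check_compliance(profile, host_config):
    """Return {setting: (expected, actual)} for every setting that differs."""
    return {
        k: (expected, host_config.get(k))
        for k, expected in profile.items()
        if host_config.get(k) != expected
    }


def apply_profile(profile, host_config):
    """Return a new host config with the profile's settings enforced."""
    return {**host_config, **profile}


reference = {
    "ntp.server": "ntp1.example.com",
    "vswitch0.mtu": 9000,
    "syslog.host": "log.example.com",
    "hostname": "esx01",  # host-specific, so deliberately not captured
}
profile = capture_profile(reference, ["ntp.server", "vswitch0.mtu", "syslog.host"])

esx02 = {"ntp.server": "ntp1.example.com", "vswitch0.mtu": 1500, "hostname": "esx02"}
print(check_compliance(profile, esx02))
# {'vswitch0.mtu': (9000, 1500), 'syslog.host': ('log.example.com', None)}
esx02 = apply_profile(profile, esx02)
print(check_compliance(profile, esx02))  # {}
```

Host-specific values such as the hostname are left out of the captured profile, so applying it keeps each host's identity intact.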

Although I am only covering ESXi 4.1, the table below shows the differences between it and older versions of VMware ESX and ESXi.

| Feature | ESX 3.5 | ESX 4.0 | ESX 4.1 | ESXi 3.5 | ESXi 4.0 | ESXi 4.1 |
|---|---|---|---|---|---|---|
| Service Console (COS) | Present | Present | Present | Removed | Removed | Removed |
| Command-line interface | COS | COS + vCLI | COS + vCLI | RCLI | PowerCLI + vCLI | PowerCLI + vCLI |
| Advanced troubleshooting | COS | COS | COS | Tech Support Mode | Tech Support Mode | Tech Support Mode |
| Scripted installations | X | X | X | | | X |
| Boot from SAN | X | X | X | | | X |
| SNMP | X | X | X | Limited | Limited | Limited |
| Active Directory integration | 3rd party in COS | 3rd party in COS | X | | | X |
| Hardware monitoring | 3rd party COS agents | 3rd party COS agents | 3rd party COS agents | CIM providers | CIM providers | CIM providers |
| Web Access | X | X | | | | |
| Host serial port connectivity | X | X | X | | | X |
| Jumbo frames | X | X | X | | X | X |

ESXi can be installed on a flash drive or on a small local or remote disk drive, and as such its layout differs from that of other operating systems. The ESXi system partitions are described below; they may differ slightly depending on whether ESXi is installed on a flash drive or a hard disk.

When your ESXi server first starts, SYSLinux is loaded. SYSLinux reads the file boot.cfg, which exists on both hypervisor1 (mounted as /bootbank) and hypervisor2 (mounted as /altbootbank), and uses the parameters build, updated and bootstate to determine which partition to boot ESXi from. If you have not upgraded, it uses /bootbank; if you have upgraded, it uses /altbootbank, and this reverses again with the next update. If there is a problem after an upgrade, you can always boot from the previous partition: at the initial VMware Hypervisor loading screen, press Shift+R to load the prior version, then press Shift+Y to confirm the revert. You should see the message "Fallback hypervisor restored successfully".
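The bank selection can be modelled in a few lines of Python. This is a simplified sketch under stated assumptions, not SYSLinux's actual logic: it assumes a bank is bootable only when `bootstate=0`, that a higher `updated` value means a newer image, and that Shift+R simply selects the other bank. The build numbers are illustrative, and real boot.cfg files contain more keys than the three shown here.

```python
def parse_boot_cfg(text):
    """Parse boot.cfg-style key=value lines into a dict (values kept as strings)."""
    cfg = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        cfg[key.strip()] = value.strip()
    return cfg


def choose_bank(banks):
    """Pick the bank to boot: bootable (assumed bootstate 0), highest 'updated'."""
    candidates = {
        name: int(cfg.get("updated", "0"))
        for name, cfg in banks.items()
        if cfg.get("bootstate") == "0"
    }
    if not candidates:
        raise RuntimeError("no bootable bank found")
    return max(candidates, key=candidates.get)


def fallback_bank(banks, current):
    """Model of Shift+R: boot the other bank instead of the current one."""
    others = [name for name in banks if name != current]
    if not others:
        raise RuntimeError("no alternate bank to fall back to")
    return others[0]


banks = {
    "hypervisor1": parse_boot_cfg("build=4.1.0-260247\nupdated=1\nbootstate=0\n"),
    "hypervisor2": parse_boot_cfg("build=4.1.0-348481\nupdated=2\nbootstate=0\n"),
}
current = choose_bank(banks)
print(current, banks[current]["build"])  # hypervisor2 4.1.0-348481
print(fallback_bank(banks, current))     # hypervisor1
```

Keeping the previous image intact on the other bank is what makes the Shift+R revert possible without reinstalling.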

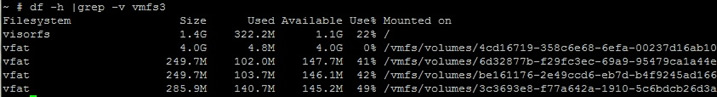

After SYSLinux determines which system image to boot, boot.cfg is read to determine the files used to boot the VMkernel; once these are loaded into memory, the boot storage is not accessed again. If you run df -h you get a listing of the filesystems that ESXi has mounted. Listed first is visorfs, the RAM disk that ESXi creates; the four vfat partitions are bootbank, altbootbank, scratch and store.

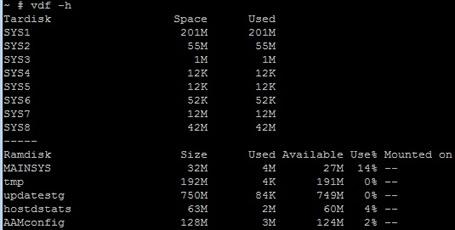

The vdf -h command is new and gives details on the RAM disks. The listing below shows the tardisks that ESXi extracted to create the filesystem; these entries correspond to the Archive and State file types in boot.cfg. Of the four RAM disk mounts, MAINSYS is the root folder, hoststats stores real-time performance data for the host, and updatestg provides staging space for patches and updates.