Storage (Local, iSCSI and SAN)

In this section I will cover the different types of storage (local, SAN and iSCSI), and I will also discuss the Virtual Machine File System (VMFS) and how to manage it.

There are a number of new storage features in version 4.1.

HBA Controllers and Local Storage

There are a number of ways to attach storage to an ESXi server; I will first cover local storage and HBA controllers. In my environment I purchased two local disk drives (500GB) to use as local storage. Many companies have no requirement for an expensive SAN solution, so they purchase a server with a large amount of local storage instead. This storage can be used to create virtual machines. Please note that it does not allow you to use features such as vMotion, which require shared storage. However, it is an ideal solution for small companies wishing to reduce the number of servers within their environment.

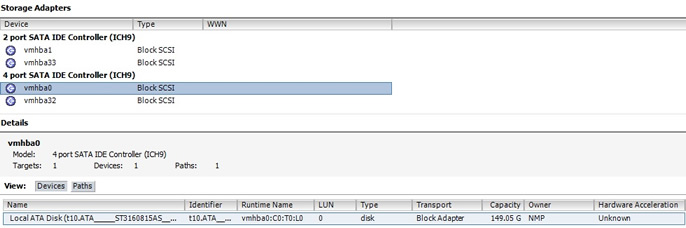

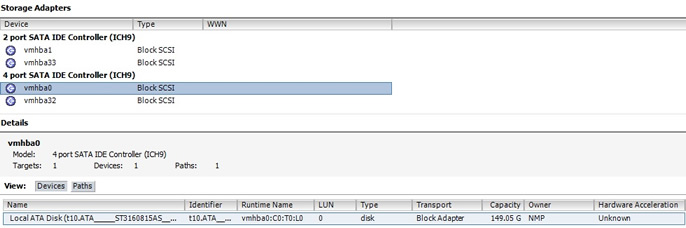

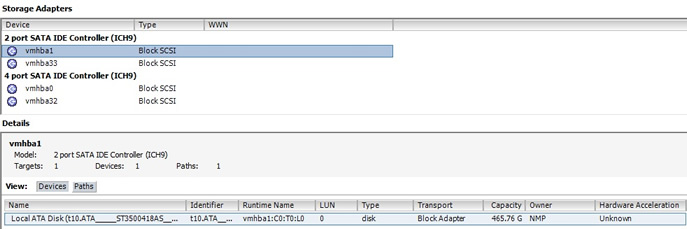

When you first install ESXi you can choose where the O/S will be installed, and afterwards you can configure additional storage to be used for virtual machines. First, though, I want to talk about controllers (HBAs). When you open the vSphere client and select the ESXi server -> Configuration -> Storage Adapters, you will be presented with a list of the storage controllers attached to your system. On my HP DC7800 the client has found 4 controllers. I have attached a 146GB SATA disk (used for the O/S) to one of them and a 500GB SATA disk (used for the VMs) to another.

The image below shows the 146GB disk I use for the ESXi O/S. It is attached to the vmhba0 controller and is a block SCSI type device. I try to keep this disk purely for the O/S and keep all virtual machines off it.

The second disk is a 500GB Seagate disk attached to vmhba1. Again it is a block SCSI type disk, and this is where I keep some of my virtual machines. If you look closely you can see the disk type and model number, which is useful if you have to replace the disk like for like. In my case it is a Seagate ST3500418AS.

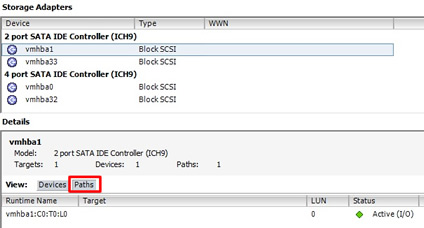

By selecting the Paths tab you can expand to see how the disk is connected. If this device were multipathed you would see all of its paths listed here; as you can see, we have one active path. We will set up a multipath device in the iSCSI section later and look at the various options that come with multipathing.

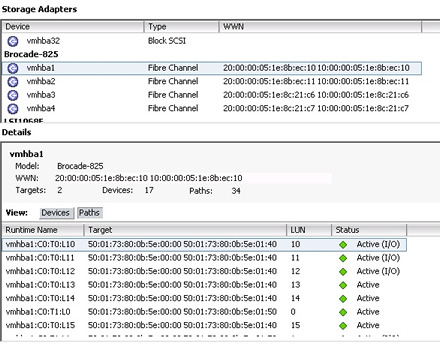

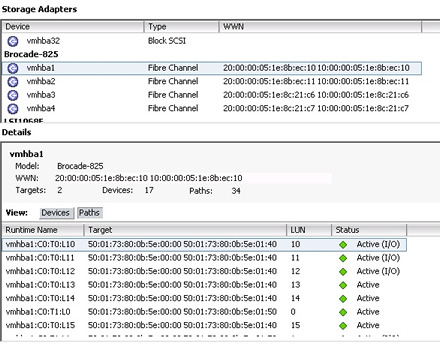

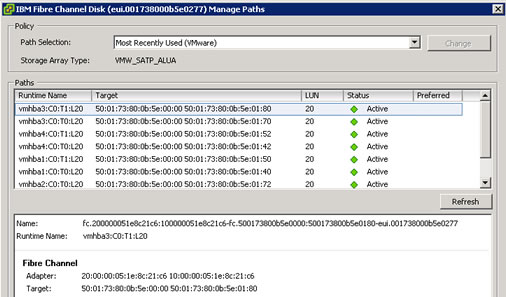

Here is an example of a multipathed server I have at work. In the Targets line you can see that I have 17 devices but 34 paths. In this example I have a Brocade 825 HBA controller connected to an IBM XIV SAN. Also notice the World Wide Names (WWNs), which identify Fibre Channel devices. The ESXi server also supports its own LUN masking, in case your SAN does not already support this.

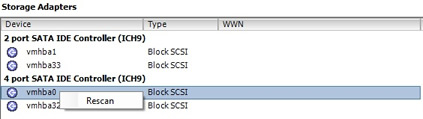
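The relationship between devices and paths in this screenshot can be illustrated with a small Python sketch: 17 devices reached over 34 paths means two paths per device. It assumes you already have a list of (device ID, path runtime name) pairs, for example noted down from the Paths tab. The device IDs below are made-up placeholders, not real NAA identifiers.

```python
from collections import defaultdict


def paths_per_device(paths):
    """Group (device_id, runtime_name) pairs into {device_id: [runtime names]}."""
    grouped = defaultdict(list)
    for device_id, runtime_name in paths:
        grouped[device_id].append(runtime_name)
    return dict(grouped)


def multipathed_devices(grouped):
    """Return the devices that are reachable over more than one path."""
    return sorted(dev for dev, runtime_names in grouped.items() if len(runtime_names) > 1)


if __name__ == "__main__":
    # Hypothetical sample data: two paths to the first device, one to the second.
    sample = [
        ("naa.example0001", "vmhba1:C0:T0:L1"),
        ("naa.example0001", "vmhba1:C0:T1:L1"),
        ("naa.example0002", "vmhba1:C0:T0:L2"),
    ]
    grouped = paths_per_device(sample)
    print(len(grouped), "devices,", len(sample), "paths")  # 2 devices, 3 paths
    print("multipathed:", multipathed_devices(grouped))    # multipathed: ['naa.example0001']
```

With the XIV example above, every one of the 17 devices would appear in the multipathed list with two runtime names each.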

If your server supports hot-swap hard disks, you can rescan that particular controller to pick up new or replaced drives. Just right-click on the controller and select Rescan, as seen in the image below. You would also use this option to rescan a fibre HBA controller to pick up any new LUNs.

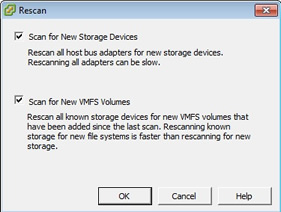

You can also rescan all controllers by selecting "Rescan All..." in the top right-hand corner of the Storage Adapters tab. This gives you the options below. I generally tick both and then select OK, and any new devices will then be added and can be used.

The last piece of information concerns the naming convention for the controllers. The ESXi server uses a special syntax so that the VMKernel can find the storage it is using, and this syntax is used by local storage, SAN and iSCSI systems alike. The syntax of a device is vmhbaN:N:N:N:N, a sequence of numbers that tells the VMKernel how to navigate to the specific storage location. The syntax is broken down below; please note this is different from older versions of ESX.

| vmhbaN:C:T:L:V | Meaning |
|---|---|
| N | HBA number |
| C | Channel |
| T | Target |
| L | LUN |
| V | Partition (volume) number, where used |
| Example | vmhba1:C0:T0:L10 means vmhba controller 1, channel 0, target 0, LUN 10 |
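The runtime name can be split into its parts programmatically. The Python sketch below handles only the four-part form used in the example vmhba1:C0:T0:L10, not the trailing V field. It also rejects LUN numbers above 255, following the 256-LUNs-per-controller limit described in this section. The regular expression is my own assumption about the format, not a VMware-supplied parser.

```python
import re
from typing import NamedTuple

# Matches runtime names such as "vmhba1:C0:T0:L10" (assumed format).
_RUNTIME_NAME = re.compile(r"^vmhba(\d+):C(\d+):T(\d+):L(\d+)$")
MAX_LUN = 255  # LUN 0 to LUN 255, as described in this section


class RuntimeName(NamedTuple):
    adapter: int  # N - HBA number
    channel: int  # C
    target: int   # T
    lun: int      # L


def parse_runtime_name(name: str) -> RuntimeName:
    """Split a vmhbaN:C:T:L runtime name into its numeric parts."""
    match = _RUNTIME_NAME.match(name)
    if match is None:
        raise ValueError(f"not a vmhbaN:C:T:L runtime name: {name!r}")
    parsed = RuntimeName(*(int(part) for part in match.groups()))
    if parsed.lun > MAX_LUN:
        raise ValueError(f"LUN {parsed.lun} is outside 0-{MAX_LUN}")
    return parsed


print(parse_runtime_name("vmhba1:C0:T0:L10"))
# RuntimeName(adapter=1, channel=0, target=0, lun=10)
```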

I have already covered some SAN disk usage in the section above. When you install a fibre HBA controller you should be able to see it in the Storage Adapters section of the ESXi server. The image below shows an ESXi server with a Brocade HBA installed. You can see the WWN of the HBA controller and the attached LUNs, and clicking on the Paths tab displays all the LUNs attached to this controller. As mentioned above, you can add additional LUNs by simply rescanning the HBA controller (provided LUN masking is set up correctly on the SAN). An ESXi server can handle up to 256 LUNs per controller, from LUN 0 to LUN 255. On some SANs, LUN 0 is a management LUN and should not be used unless you want to run the management software within a virtual machine.

Here is a multipathing screenshot of one of the disks. I will cover multipathing in the next section and show you how to set it up and configure it in my test environment.

You can also rescan from the command line:

| Rescan from the command line | esxcfg-rescan <adapter name>, for example: esxcfg-rescan vmhba1 |

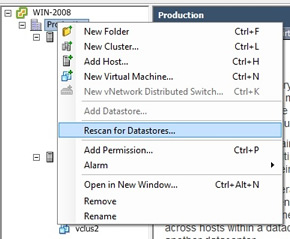

One problem with older versions of ESX was that if you created a new LUN for 32 ESX servers, you had to go into each one and rescan. In version 4.1 you can use vCenter to rescan many hosts with a single click. Just right-click the vCenter object (in my case it is called Production) and select "Rescan for Datastores". You may get a warning message, as the rescan may take some time to complete.

iSCSI is the cheaper alternative to Fibre Channel. It still presents a LUN just as a SAN does. What makes it different is the transport used to carry the SCSI commands: iSCSI uses TCP port 3260, so you may need to open this port on any firewalls. iSCSI uses the normal network cabling infrastructure to carry the SCSI commands, which means you do not need any additional equipment (although good-quality network cards should be purchased). This approach uses the VMware software initiator. You can also buy NICs with iSCSI support, which are known as hardware initiators. The VMware software initiator is based on the Cisco iSCSI initiator, and the entire iSCSI stack resides inside the VMKernel. The initiator (the client) begins the communication with the iSCSI target (the disk array). A good NIC will have what is called a TCP Offload Engine (TOE), which improves performance by moving the TCP processing load off the main CPU.
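Because iSCSI runs over TCP port 3260, a quick way to check that a firewall is not blocking the target is to try opening a TCP connection to it. The Python sketch below does only that. It does not speak the iSCSI protocol or log in to the target, so a successful connection says nothing about LUN masking or CHAP.

```python
import socket

ISCSI_PORT = 3260  # default iSCSI target port


def port_reachable(host: str, port: int = ISCSI_PORT, timeout: float = 3.0) -> bool:
    """Return True if a plain TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False
```

For example, run from a machine on the storage network, `port_reachable("192.168.1.75")` should return True if the Openfiler address used later in this section is accepting iSCSI connections.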

There are a number of limitations with iSCSI that you should be aware of

iSCSI supports authentication using the Challenge Handshake Authentication Protocol (CHAP) for additional security. iSCSI traffic should also be on its own LAN for performance reasons.
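CHAP adds security without sending the secret over the network. The Python sketch below shows the CHAP response calculation from RFC 1994, which is the MD5 variant iSCSI CHAP uses: MD5 over the one-octet identifier, the shared secret and the target's challenge. The secret is a placeholder. This only illustrates the exchange; it is not a configuration step on ESXi.

```python
import hashlib
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """CHAP (RFC 1994, MD5): hash of identifier octet + shared secret + challenge."""
    if not 0 <= identifier <= 255:
        raise ValueError("CHAP identifier must fit in one octet")
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


# The target sends an identifier and a random challenge...
identifier, challenge = 1, os.urandom(16)
secret = b"example-shared-secret"  # hypothetical; both sides know it in advance
# ...the initiator returns only the hash, and the target recomputes it to compare.
response = chap_response(identifier, secret, challenge)
print(response == chap_response(identifier, secret, challenge))  # True
```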

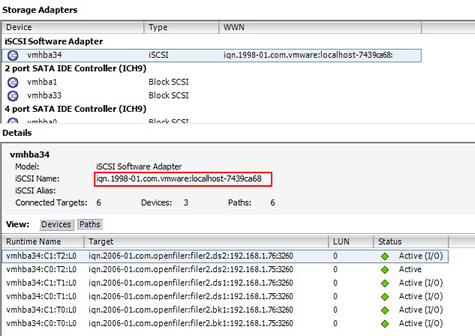

iSCSI has its own naming convention: it uses the iSCSI Qualified Name (IQN), which is a bit like a DNS name in reverse. The image below shows my current iSCSI setup. As mentioned in my test environment section, I am using Openfiler as an iSCSI server. The IQN in my case is iqn.1998-01.com.vmware:localhost-7439ca68. It is set the first time you configure the software adapter, and it can be changed if you want a different name.

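My IQN, iqn.1998-01.com.vmware:localhost-7439ca68, breaks down as follows:

| IQN part | Meaning |
|---|---|
| iqn | Always the first part |
| 1998-01 | Year and month in which the naming authority registered its domain name |
| com.vmware | The naming authority's domain name, reversed (vmware.com) |
| localhost-7439ca68 | A unique name chosen by the naming authority (here the host name followed by a generated suffix) |

Basically, the IQN is used to ensure uniqueness.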
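The same breakdown can be done in code. The Python sketch below assumes the iqn.yyyy-mm.reversed-domain[:unique-name] layout shown above and checks it with a regular expression of my own. It does not implement the full iSCSI name normalisation rules.

```python
import re
from typing import NamedTuple

# iqn.<yyyy-mm>.<reversed domain>[:<unique name>]
_IQN = re.compile(r"^iqn\.(\d{4}-\d{2})\.([^:]+)(?::(.+))?$")


class IQN(NamedTuple):
    date: str       # year-month the naming authority owned the domain
    authority: str  # naming authority's domain, reversed (e.g. com.vmware)
    unique: str     # name chosen by the naming authority ("" if absent)

    def domain(self) -> str:
        """Turn the reversed authority back into a normal DNS name."""
        return ".".join(reversed(self.authority.split(".")))


def parse_iqn(name: str) -> IQN:
    match = _IQN.match(name)
    if match is None:
        raise ValueError(f"not an iqn-format name: {name!r}")
    date, authority, unique = match.groups()
    return IQN(date, authority, unique or "")


iqn = parse_iqn("iqn.1998-01.com.vmware:localhost-7439ca68")
print(iqn.date, iqn.domain(), iqn.unique)  # 1998-01 vmware.com localhost-7439ca68
```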

To configure the ESXi server to use iSCSI with the software initiator, you need to set up a VMKernel port group for IP storage and then connect the ESXi server to the iSCSI software adapter.

iSCSI VMkernel port group

To set up a VMKernel storage port group, follow the steps below.

If you followed the above, you should have something that looks like the image below.

Next we have to connect the ESXi server to an iSCSI software adapter

| connecting ESXI to an iSCSI software adapter | First you need to select the iSCSI storage adapter

select properties to open the below screen then select configure, this is the iSCSI IQN name, you can change this if you want, I left my as the default

select the "Dynamic Discovery" tab and select the add button, enter the IP address of your iSCSI system, leave the default port 3260 unless this is different. You may have noticed that I have two connections this is to the same iSCSI server, this is related to multipathing and we will be looking at this later. Once you have entered the details and clicked OK you will be prompted for a rescan

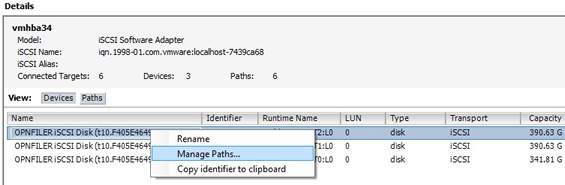

You can get more details about your LUN's in the storage adapter panel as below, here you can see 3 attached LUN's two 390GB LUN's and one 341GB LUN, also if you notice I have 3 devices but 6 paths which means I am using multipathing.

|

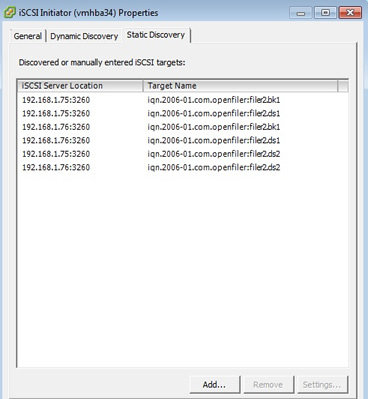

Lastly I want to talk about multipathing. I have set up my Openfiler server to use two interfaces. To multipath, all you do is carry out the task above, "connecting ESXi to an iSCSI software adapter", for both IP addresses. After a rescan, the "Static Discovery" tab should display all the attached LUNs. In my case I have 3 LUNs, each of which is multipathed (hence the 6 targets).

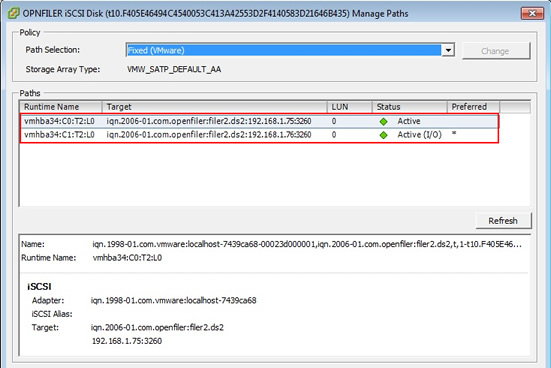

You can confirm that each device has two connections by right-clicking the disk and selecting "Manage Paths".

You then get the screen below. I have two active paths, one using IP address 192.168.1.75 and the other 192.168.1.76 (the preferred one), both using port 3260.

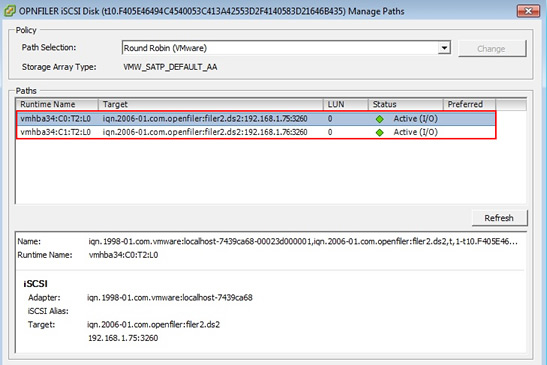

By default the ESXi server uses a fixed path for I/O, but you can change this. The other options include "Round Robin", which makes use of both network adapters. Just select the down arrow on "Path Selection", then click the Change button to update. As you can see in the image below, I have changed this disk to use Round Robin: both paths are now active and there is no preferred path.

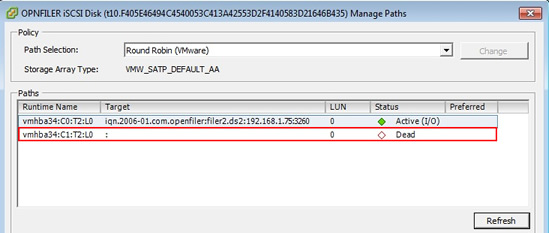
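The behaviour in this screenshot can be illustrated with a toy model. The Python sketch below spreads I/Os across every active path in turn, switching after a configurable number of I/Os. `ios_per_switch` is a hypothetical parameter of this sketch, not a VMware setting name. This is not VMware's Round Robin plugin, just a model of the idea using the two path addresses from this section.

```python
from itertools import cycle


def round_robin(paths, io_count, ios_per_switch=1):
    """Toy round-robin path selection.

    paths maps a path name to its state ("active" or "dead"). I/Os are spread
    over the active paths, moving to the next path every ios_per_switch I/Os.
    """
    active = [name for name, state in paths.items() if state == "active"]
    if not active:
        raise RuntimeError("no active paths to the device")
    order = cycle(active)
    current = next(order)
    assignment = []
    for io in range(io_count):
        if io and io % ios_per_switch == 0:
            current = next(order)  # time to switch to the next active path
        assignment.append(current)
    return assignment


paths = {"192.168.1.75:3260": "active", "192.168.1.76:3260": "active"}
print(round_robin(paths, 4))
```

Each path carries every other I/O here. With a fixed policy, by contrast, all four I/Os would go down the preferred path.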

So what happens when you lose a path? The path is marked as dead, and the ESXi server continues to function but now has less resilience.

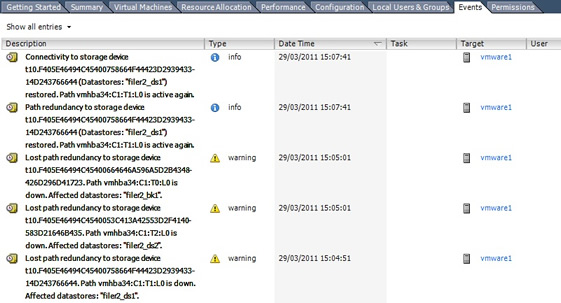
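Here is a toy Python model of the fixed policy. It uses the preferred path while that path is active and falls back to another active path when it is not. This is a simplification for illustration: it ignores failback timing and the other policies. It uses the two path addresses from this section.

```python
def select_fixed_path(paths, preferred):
    """Toy model of a fixed path policy with failover.

    Use the preferred path while it is active; if it is dead, fall back to
    the first other active path. Raise if no path is left.
    """
    if paths.get(preferred) == "active":
        return preferred
    for name, state in paths.items():
        if state == "active":
            return name
    raise RuntimeError("all paths are dead: the device is unavailable")


paths = {"192.168.1.75:3260": "active", "192.168.1.76:3260": "active"}
preferred = "192.168.1.76:3260"
print(select_fixed_path(paths, preferred))  # 192.168.1.76:3260

paths[preferred] = "dead"  # e.g. the network cable is pulled
print(select_fixed_path(paths, preferred))  # 192.168.1.75:3260
```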

All of this can also be viewed from the Events tab, where you can see me disconnecting and then reconnecting the network cable. These events can be captured by third-party software such as BMC PATROL and then monitored along with the rest of your environment.

There are a number of commands that you can use to display and configure multipathing:

| Description | Command |
|---|---|
| Detailed information | esxcfg-mpath -l |
| List all paths with abbreviated information | esxcfg-mpath -L |
| List all paths with adapter and device mappings | esxcfg-mpath -m |
| List all devices with their corresponding paths | esxcfg-mpath -b |
| List all multipathing plugins loaded into the system | esxcfg-mpath -G |
| Set the state for a specific LUN path (requires the path UID or path runtime name in --path) | esxcfg-mpath --state <active\|off> |
| Specify a specific path for operations | esxcfg-mpath -P |
| Filter the list commands to display only a specific device | esxcfg-mpath -d |
| Restore path settings to configured values on system start | esxcfg-mpath -r |

iSCSI is very easy to set up on VMware. The main problems normally reside in the iSCSI server/SAN and in granting the permissions for the ESXi server to see the LUNs (this is known as LUN masking). I will point out again that you should really use a dedicated network for your iSCSI traffic, as this will greatly improve performance.

Virtual Machine File System (VMFS)

VMFS is VMware's own proprietary filesystem. It supports directories and a maximum of 30,720 files in a VMFS volume, with up to 256 VMs per volume. A VMFS volume can hold virtual machines, templates and ISO files. VMFS has been designed to work with very large files such as virtual disks. It also fully supports concurrent access, which means more than one ESXi server can access the same VMFS-formatted LUN without fear of corruption. SCSI reservations are used to perform the file and LUN locking. VMware improved this in VMFS version 3 to significantly reduce the number and frequency of these reservations. Remember that these locks are dynamic, not fixed or static.

Whenever an ESXi server powers on a virtual machine, a file-level lock is placed on the VM's files. The ESXi server periodically confirms that it is still running the virtual machine and holding the locks on its files; this is known as updating its heartbeat information. If an ESXi server fails to update these dynamic locks or its heartbeat region of the VMFS file system, the virtual machine files are forcibly unlocked. This is critical for features like VMware HA: if locking were not dynamic, the locks would remain in place when an ESXi server failed, and HA would not work. In version 4.1, up to 64 ESXi servers can share the same VMFS volume (an HA/DRS cluster itself is limited to 32 hosts).
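The idea of a dynamic lock kept alive by a heartbeat can be sketched as a lease. A host holds a lock only as long as it keeps refreshing it, and another host may take over a lock whose heartbeat has gone stale. This is a simplified Python model with assumed numbers; the 30-second lease is hypothetical. It is not the VMFS on-disk locking format and does not use VMware's actual timeout values.

```python
from dataclasses import dataclass


@dataclass
class FileLock:
    owner: str             # host currently holding the lock
    last_heartbeat: float  # time (seconds) of the owner's last heartbeat


class LeaseLockTable:
    """Toy model of heartbeat-based (dynamic) file locks."""

    def __init__(self, lease_seconds: float):
        self.lease_seconds = lease_seconds  # hypothetical lease length
        self.locks = {}

    def acquire(self, path: str, host: str, now: float) -> bool:
        lock = self.locks.get(path)
        if lock and lock.owner != host and now - lock.last_heartbeat < self.lease_seconds:
            return False  # another host holds a live lock
        # Free, already ours, or stale (owner stopped heartbeating): take it.
        self.locks[path] = FileLock(host, now)
        return True

    def heartbeat(self, host: str, now: float) -> None:
        for lock in self.locks.values():
            if lock.owner == host:
                lock.last_heartbeat = now


table = LeaseLockTable(lease_seconds=30)
table.acquire("vm1/vm1.vmdk", "esx1", now=0)
table.heartbeat("esx1", now=10)
print(table.acquire("vm1/vm1.vmdk", "esx2", now=15))  # False: esx1 is alive
# esx1 fails and stops heartbeating; once the lease expires esx2 can take over,
# which is what lets HA restart the VM on another host.
print(table.acquire("vm1/vm1.vmdk", "esx2", now=50))  # True
```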

VMFS uses a distributed journal that records all the changes to the VMFS metadata. If a crash does occur, it uses the journal to replay the changes rather than carrying out a full fsck check. This can be much quicker, especially on large volumes.
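Journal replay is a general technique (write-ahead or redo logging) rather than anything unique to VMFS. The Python sketch below shows the idea under simplified assumptions. Metadata is a dictionary, and each journal transaction records the new values it wrote. Recovery re-applies only committed transactions instead of scanning the whole volume the way fsck would. The key names are made up for illustration.

```python
def replay(metadata, journal):
    """Redo-log recovery sketch.

    metadata: dict of metadata values as last written to disk.
    journal:  list of transactions, each {"committed": bool, "changes": [(key, value), ...]}.
    Committed transactions are re-applied in order; an uncommitted (torn) one is skipped.
    Re-applying a change just rewrites the same value, so replay is safe to repeat.
    """
    recovered = dict(metadata)
    for txn in journal:
        if not txn["committed"]:
            continue
        for key, value in txn["changes"]:
            recovered[key] = value
    return recovered


on_disk = {"file1.size": 10}
journal = [
    {"committed": True, "changes": [("file1.size", 20), ("file2.size", 5)]},
    {"committed": False, "changes": [("file3.size", 7)]},  # crash before commit
]
print(replay(on_disk, journal))  # {'file1.size': 20, 'file2.size': 5}
```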

You should always format new volumes via the vSphere client. This stops I/O from crossing track boundaries on the underlying disk, which is known as disk alignment. The vSphere client automatically ensures that the partition is aligned when you format a VMFS volume in the GUI. Note, however, that if you are going to use RDM files to access raw (native) LUNs, disk alignment should be done within the guest operating system.
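Disk alignment comes down to simple arithmetic: a partition is aligned when its starting byte offset is a multiple of the boundary used by the underlying storage. The Python sketch below assumes 512-byte sectors and a 64KB boundary. Older partitioning tools commonly started the first partition at sector 63, while a VMFS volume created in the vSphere client starts at sector 128 (64KB). Check your array vendor's recommended boundary before relying on these numbers.

```python
SECTOR_BYTES = 512              # assumed logical sector size
ALIGNMENT_BOUNDARY = 64 * 1024  # assumed boundary (64KB)


def is_aligned(start_sector: int,
               sector_bytes: int = SECTOR_BYTES,
               boundary: int = ALIGNMENT_BOUNDARY) -> bool:
    """True if a partition starting at start_sector begins on the boundary."""
    return (start_sector * sector_bytes) % boundary == 0


print(is_aligned(63))   # False: 63 * 512 = 32,256 bytes, not a multiple of 65,536
print(is_aligned(128))  # True: 128 * 512 = 65,536 bytes
```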

One big word of warning: you have huge potential to screw things up pretty badly in VMware. Take note of any warnings, and make sure that your data is secured (backed up) before making important changes. There is no rollback feature in VMware.

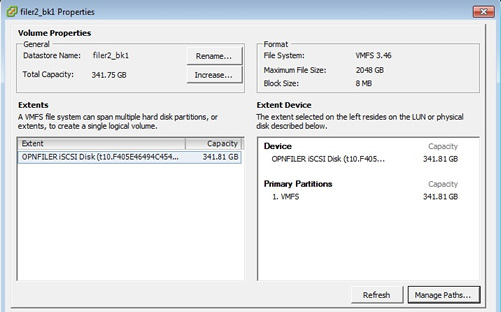

When you format a new disk you will be asked to set the block size (1MB, 2MB, 4MB or 8MB). This controls the maximum file size that can be held in the VMFS volume (256GB, 512GB, 1024GB or 2048GB respectively). Block size does not greatly affect performance, but it does affect the maximum virtual disk (VMDK) file size. I will discuss virtual machine files in my section on virtual machines. My suggestion would be to set it to 8MB, as this allows you to have very large VMDK files.
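The block size to maximum file size relationship is linear: each 1MB of block size allows 256GB of file. The Python sketch below uses the nominal figures from the paragraph above to pick the smallest block size for a given VMDK. The real limit is slightly below the nominal figure (for 8MB blocks it is 2TB minus 512 bytes), so the sketch uses a strict comparison and treats a VMDK of exactly the nominal size as too big.

```python
BLOCK_SIZES_MB = (1, 2, 4, 8)  # block sizes offered when formatting
GB_PER_MB_BLOCK = 256          # 1MB -> 256GB, 2MB -> 512GB, and so on


def max_file_size_gb(block_mb: int) -> int:
    """Nominal largest file (e.g. a VMDK) for a block size."""
    if block_mb not in BLOCK_SIZES_MB:
        raise ValueError(f"unsupported block size: {block_mb}MB")
    return block_mb * GB_PER_MB_BLOCK


def smallest_block_for(vmdk_gb: float) -> int:
    """Smallest block size whose limit can hold a VMDK of vmdk_gb.

    The strict comparison leaves room for the real limit being slightly
    below the nominal figure.
    """
    for block_mb in BLOCK_SIZES_MB:
        if vmdk_gb < max_file_size_gb(block_mb):
            return block_mb
    raise ValueError("larger than the biggest file size (2048GB)")


print([max_file_size_gb(b) for b in BLOCK_SIZES_MB])  # [256, 512, 1024, 2048]
print(smallest_block_for(300))                        # 2
```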

You can use the entire disk or make the VMFS volume smaller than the LUN if you wish. However, it is not easy to get that free space back, and that's if anyone remembers where it was. It is possible to create multiple VMFS volumes on a single LUN, but this can affect performance. A LUN-wide SCSI reservation temporarily blocks access to every VMFS volume on the LUN when only one of them might need locking. So try to keep one VMFS volume per LUN.

You will be required to set a datastore name during the format process. VMFS volumes are known by four values: volume label, datastore label, vmhba syntax and UUID. Volume labels need to be unique to an ESXi server, whereas datastore labels need to be unique within vCenter. So try to create a standard naming convention for your company and give volumes meaningful names.

Enough talking, let's format a new iSCSI LUN.

Format a new VMFS volume

In the vSphere client or vCenter, select the ESXi server -> Configuration -> Storage, then select the "Add Storage" link. You should get the screen below. Select Disk/LUN (iSCSI, Fibre and local disk), then click Next.

Select the disk/LUN that you want to format; I have only one free disk to add.

Here I have no option but to continue.

Now give the disk/LUN a label. I have called mine "filer2_bk1", which means that this disk is from iSCSI server filer2 and it is my first backup disk. We will be using this later when cloning and snapshotting VMs, etc.

Here is where you set the block size. I have chosen 8MB and decided to use the entire disk.

Now we get a summary screen. Most importantly, make sure you are happy with it, as there is no going back.

The disk/LUN is formatted and then appears in the disk storage screen, as seen below.

There are a number of properties that you can change on an already created volume: you can increase its size, rename it, or manage the paths (as seen in the iSCSI multipathing section). Just right-click on the volume and select Properties, and you should see the screen below. It also shows various information about the volume, such as the maximum file size and the file system version number. In older versions of ESX you had to increase VMFS volumes using extents; it is now recommended that you use the Increase button on the properties window (see image below). You can double-check whether you have any free space by looking at the Extent Device panel in the right-hand window: the device capacity should be greater than the primary partition size. Also make a note of the disk name in the left panel, as this is the list you will be presented with when increasing the volume size.

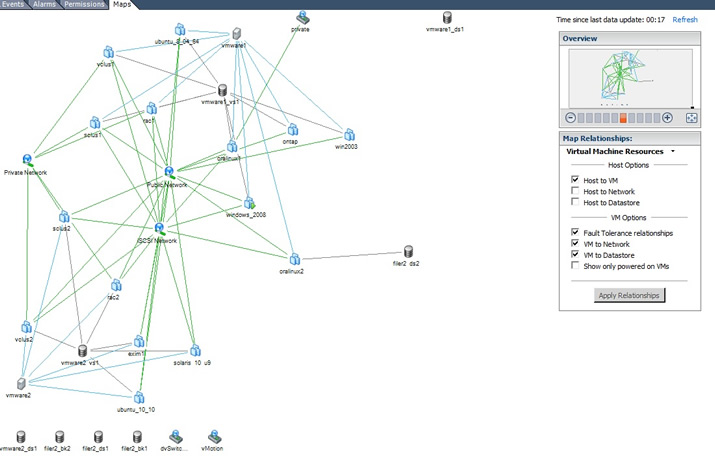

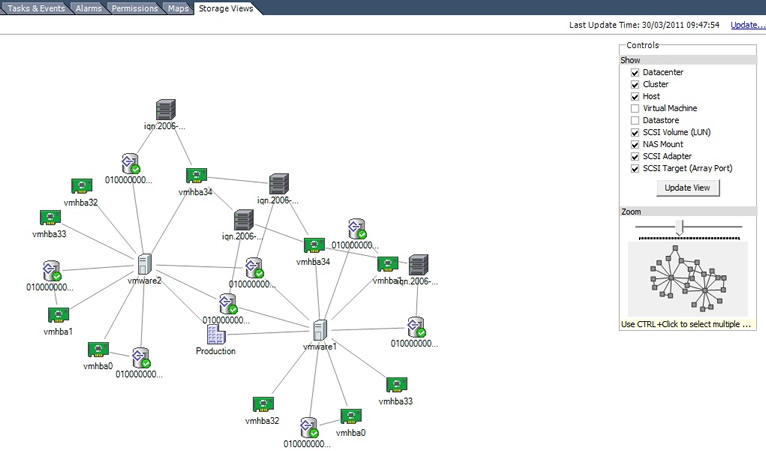

There are a number of places where you can get details on the storage within your ESXi server, and this is where vCenter has advantages over the vSphere client. One of the nice features of vCenter is the map diagrams. There are many options you can change, as you can see in the image below, so that you display just the information you need. However, the diagram can become messy if you have lots of ESXi servers, storage, etc.

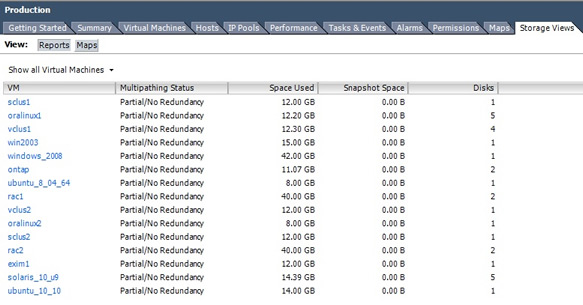

Another nice view in vCenter is the folder (in my case the Production folder) -> storage view screen, where you can look at either the report or the maps. Again, the map has many options, and it looks nice in your documentation.

Report

Map

The rest of the diagrams in this section came from the vSphere client. The vCenter software gives you additional views and the added bonus of seeing all the ESXi server views in one place; otherwise you would have to log in to each ESXi server.