Finally, if you have been following the series, we come to the most enjoyable part: vMotion, Storage vMotion and cold migration. Before we start, a quick recap of my test environment: I have two ESXi servers connected to an Openfiler iSCSI server, which supplies the shared storage. I will also be using the VM I created in my virtual machine section to show you how to migrate storage using Storage vMotion.

To say we are moving a VM from one ESXi server to another with vMotion is a bit of a lie. We don't actually move the VM's disk data at all, because it stays on the shared storage; only the VM's memory contents are moved from one ESXi server to the other. In effect, the VM on the first ESXi server is duplicated onto the second ESXi server and the original is then removed.

During vMotion, the first ESXi server makes an initial pre-copy of the running VM's memory to the second ESXi server. While this copy is in progress, every change made to memory is tracked (this record is referred to as the memory bitmap). Once the two copies are practically at the same state, the VM on the first ESXi server is put into a quiesced state and the memory bitmap is transferred to the second ESXi server. Quiescing reduces the activity inside the VM being migrated, so the bitmap becomes small enough to transfer very quickly. It also allows for rollback if a network failure occurs, which means the migration either succeeds completely or fails and leaves the VM running on the original server. When the bitmap has been transferred, the VM resumes on the new ESXi server, users are switched over, and the original VM is removed from the first ESXi server.
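The pre-copy loop described above can be modelled as a toy simulation to show why it converges. This is a sketch under stated assumptions, not VMware's implementation: the page counts, the constant dirty rate and the stop threshold are made-up illustration values, and `simulate_precopy` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class PrecopyResult:
    rounds: list      # pages sent in each live pre-copy round
    final_copy: int   # dirty pages sent while the VM is quiesced
    converged: bool   # False if the dirty set never got below the threshold


def simulate_precopy(total_pages: int, bandwidth: float, dirty_rate: float,
                     threshold: int, max_rounds: int = 30) -> PrecopyResult:
    """Toy model of iterative memory pre-copy (hypothetical, for illustration).

    bandwidth and dirty_rate are both in pages per second and are assumed
    constant, which a real workload never is.
    """
    rounds = []
    to_send = total_pages  # the first pass copies every page
    for _ in range(max_rounds):
        rounds.append(to_send)
        seconds = to_send / bandwidth
        # pages the guest touched while this round was in flight: the "bitmap"
        dirty = min(total_pages, int(dirty_rate * seconds))
        if dirty <= threshold:
            # small enough: quiesce the VM and send the last dirty pages
            return PrecopyResult(rounds, dirty, True)
        to_send = dirty
    # never converged: the remaining dirty set would have to go while quiesced
    return PrecopyResult(rounds, to_send, False)


# 1 GiB of 4 KiB pages over roughly a 1 Gbps link (~30,000 pages/s)
result = simulate_precopy(total_pages=262_144, bandwidth=30_000,
                          dirty_rate=3_000, threshold=100)
print(result.rounds, result.final_copy, result.converged)
# prints [262144, 26214, 2621, 262] 26 True
```

If `dirty_rate` is at or above `bandwidth`, the dirty set never shrinks and `converged` comes back `False`, which is one way to picture why a busy VM on a slow vMotion network struggles.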

To perform a vMotion you need the following; all requirements apply to both ESXi servers involved:

- Shared storage visibility between the source and destination ESXi servers, including any RDM-mapped LUNs

- A VMkernel port group on a vSwitch connected to a 1 Gbps vMotion network; it requires its own IP address

- Access to the same network, preferably without traversing additional switches, routers, etc.

- Consistently labeled vSwitch port groups

- Compatible CPUs

If any one of the above has not been met, vMotion will fail. I just want to touch on network quality: I highly recommend that you use a dedicated 1 Gbps network for vMotion. Although it is possible to perform a vMotion over a 100 Mbps network, it can fail, and regularly does, and VMware will not support it. CPU compatibility is also a show-stopper, so try to make sure that all the ESXi servers you plan to use with vMotion are the same. It is possible to vMotion across different CPU types, but it can be a real pain, and an expensive way to find out that it does not work, so buy for compatibility.
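The host-side checklist above can be expressed as a simple pre-flight check. This is a minimal sketch assuming a hypothetical `Host` record that you would fill in yourself; it does not query vCenter, and comparing CPU vendor and family is only a crude stand-in for VMware's real CPU compatibility checks. The `VM Network` port group and the CPU family value are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    datastores: set         # datastore names the host can see
    port_groups: set        # port group labels exactly as typed (case matters)
    vmotion_link_mbps: int  # speed of the NIC behind the vMotion VMkernel port
    cpu_vendor: str
    cpu_family: int         # crude stand-in for real CPU feature compatibility


def vmotion_host_problems(src, dst, vm_datastores, required_port_groups):
    """Return human-readable reasons this pair of hosts would fail vMotion."""
    problems = []
    shared = src.datastores & dst.datastores
    missing = vm_datastores - shared
    if missing:
        problems.append(f"not on shared storage: {sorted(missing)}")
    for pg in sorted(required_port_groups):
        for host in (src, dst):
            if pg not in host.port_groups:  # exact, case-sensitive match
                problems.append(f"{host.name}: no port group labelled {pg!r}")
    for host in (src, dst):
        if host.vmotion_link_mbps < 1000:
            problems.append(f"{host.name}: vMotion link slower than 1 Gbps")
    if (src.cpu_vendor, src.cpu_family) != (dst.cpu_vendor, dst.cpu_family):
        problems.append("CPU vendor/family differ between hosts")
    return problems


vmware1 = Host("vmware1", {"filer2_ds2", "vmware1_vs1"},
               {"vMotion", "VM Network"}, 1000, "Intel", 6)
vmware2 = Host("vmware2", {"filer2_ds2"},
               {"VMotion", "VM Network"}, 1000, "Intel", 6)  # note the capital V
print(vmotion_host_problems(vmware1, vmware2, {"filer2_ds2"},
                            {"vMotion", "VM Network"}))
# prints ["vmware2: no port group labelled 'vMotion'"]
```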

There are also a few things on the VM side that will prevent a vMotion:

- Inconsistently named port groups: vMotion expects the port group names for both the vMotion VMkernel port and the VM port groups to be spelled the same, in the same case, on both servers; for example, vMotion and VMotion are different.

- Active connections to an internal switch: by active I mean that the VM is configured and connected to an internal-only vSwitch (one with no physical uplinks).

- An active connection to a CD/DVD that is not shared: vMotion cannot guarantee that the CD/DVD will still be connected after the switch.

- CPU affinities: vMotion cannot guarantee that the VM will be able to continue running on a specific CPU number on the destination server.

- VMs in a cluster relationship that use RDM files for VM clustering; MSCS used to have problems with this.

- No visibility of the LUN, where the RDM files are not visible to both ESXi servers.

- Inconsistent security settings on a vSwitch or port group: if the settings are mismatched, vMotion will report an error.

- Snapshots are fully supported. You may be warned about reverting when a VM is moved, but as long as the data is on the shared storage there should be no problems.
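In the same spirit, the VM-side blockers can be checked before you start. This is a hypothetical sketch: `VmConfig` and its fields are assumptions that mirror the list above, not properties of any VMware API.

```python
from dataclasses import dataclass, field


@dataclass
class VmConfig:
    name: str
    cdrom_connected: bool = False           # CD/DVD currently connected
    cdrom_on_shared_storage: bool = True    # backing visible to both hosts
    cpu_affinity: list = field(default_factory=list)  # empty = no affinity
    on_internal_only_vswitch: bool = False  # NIC on a vSwitch with no uplinks
    rdm_visible_to_both_hosts: bool = True


def vm_blockers(vm):
    """List the VM-side reasons (from the list above) that stop a vMotion."""
    blockers = []
    if vm.cdrom_connected and not vm.cdrom_on_shared_storage:
        blockers.append("connected CD/DVD is not shared")
    if vm.cpu_affinity:
        blockers.append(f"CPU affinity set to {vm.cpu_affinity}")
    if vm.on_internal_only_vswitch:
        blockers.append("active connection to an internal-only vSwitch")
    if not vm.rdm_visible_to_both_hosts:
        blockers.append("RDM LUN not visible to both hosts")
    return blockers


web01 = VmConfig("web01", cdrom_connected=True,
                 cdrom_on_shared_storage=False, cpu_affinity=[0, 1])
print(vm_blockers(web01))
# prints ['connected CD/DVD is not shared', 'CPU affinity set to [0, 1]']
```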

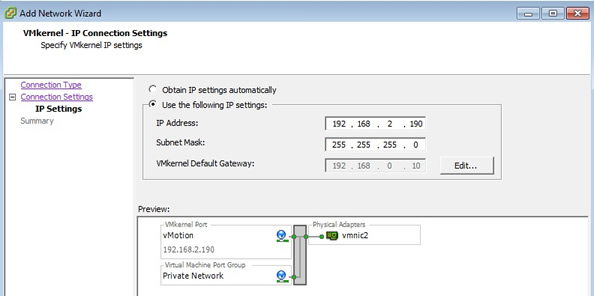

vMotion requires a VMkernel port group with a valid IP address and subnet mask for the vMotion network. A default gateway is not required (you should really have all the ESXi servers you are going to use with vMotion on the same subnet), but VMware does support vMotion across routers or WANs. You can create a vMotion port group on an existing vSwitch.

So let's create the vMotion port group.


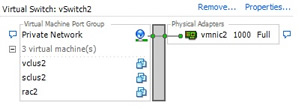

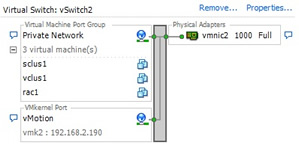

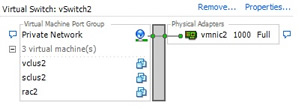

I have two ESXi servers (vmware1 and vmware2) connected to a NETGEAR GS608 switch, both at 1 Gbps. I have already created a private port group on vSwitch2; this is a dedicated network for my vMotion traffic. If you need a refresher on port groups and vSwitches, check out my networking section.

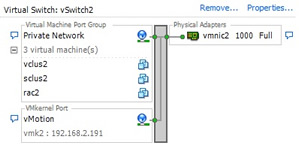

Remember that we will be performing this on both ESXi servers. I will be using vSwitch2, which uses a dedicated network, to create my vMotion port group.

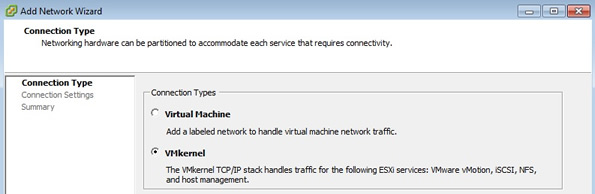

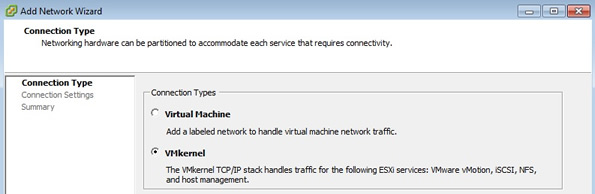

Select Properties for the vSwitch, then click the Add button and the screen below should appear. Select VMkernel (you should notice VMware vMotion mentioned in the list).

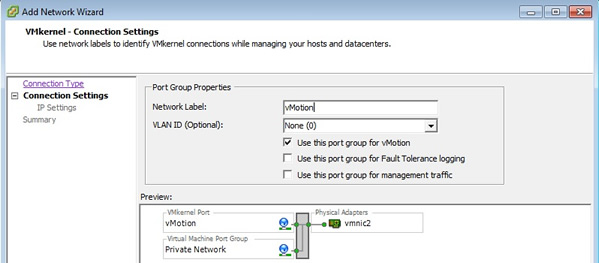

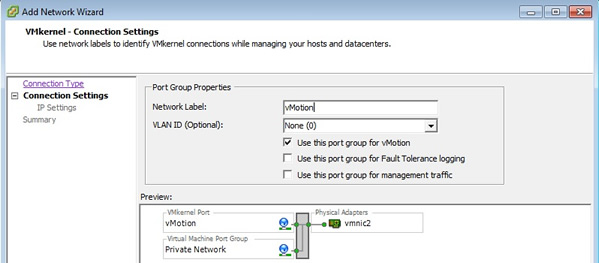

Select a meaningful name and make sure that it is spelt the same on both ESXi servers, as it is case sensitive. Then tick "Use this port group for vMotion".

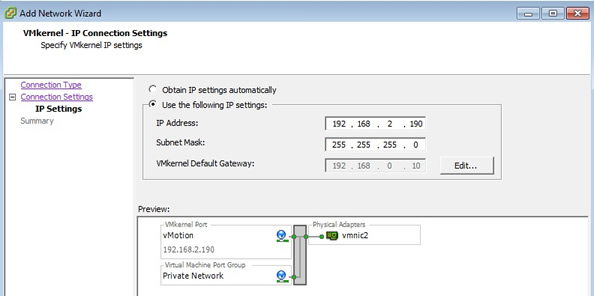

Enter an IP address (the two servers must of course use different addresses). The gateway is optional unless you are going over WANs.
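The IP rules (different addresses on each server, and a gateway only when the two addresses sit on different subnets) can be checked with Python's standard `ipaddress` module. The addresses and the default 255.255.255.0 mask below are hypothetical examples, and `gateway_needed` is an illustrative helper.

```python
import ipaddress


def gateway_needed(ip_a: str, ip_b: str, netmask: str = "255.255.255.0") -> bool:
    """Validate two vMotion VMkernel addresses; True if a gateway is required."""
    a = ipaddress.ip_interface(f"{ip_a}/{netmask}")
    b = ipaddress.ip_interface(f"{ip_b}/{netmask}")
    if a.ip == b.ip:
        # the duplicate-address mistake that makes vMotion fail
        raise ValueError(f"both hosts use the same vMotion IP {a.ip}")
    # same subnet: traffic flows directly, so no gateway is required
    return a.network != b.network


print(gateway_needed("192.168.10.11", "192.168.10.12"))  # False
print(gateway_needed("192.168.10.11", "192.168.20.12"))  # True
```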

Lastly, we get the summary page.

Here are both ESXi servers' vMotion port groups, spelt the same and with different IP addresses:

ESXi server (vmware1)

ESXi server (vmware2)

Would you believe that this is all there is to it? Well, it is. You can now hot migrate any VM that is configured on the shared storage and meets the above requirements, so let's migrate a VM.

VM migration using vMotion

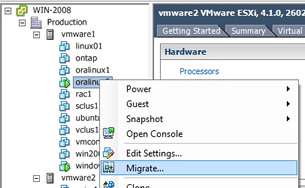

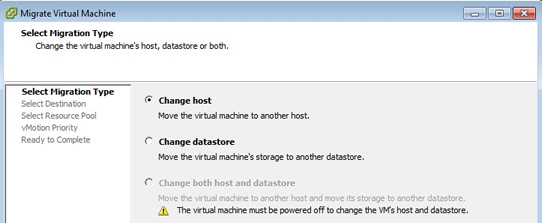

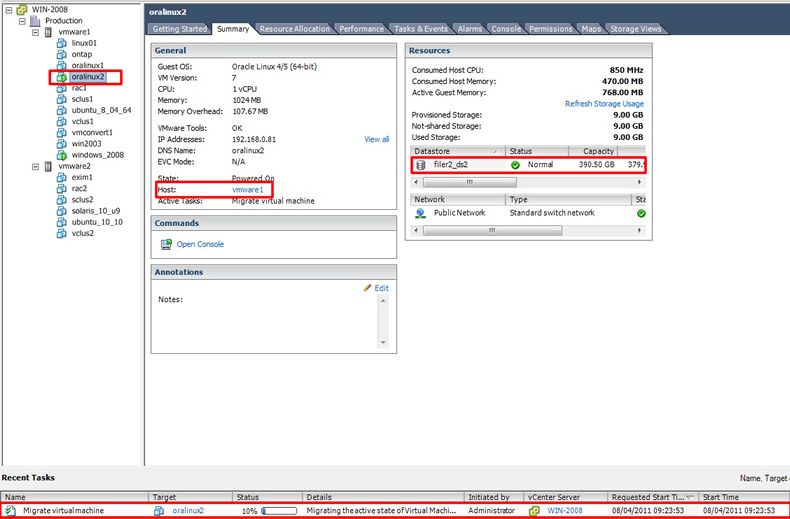

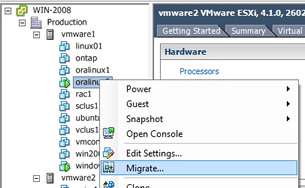

I am going to use an existing VM that I have configured on the shared storage. Notice that the VM is powered on and running. Right-click the VM and select Migrate.

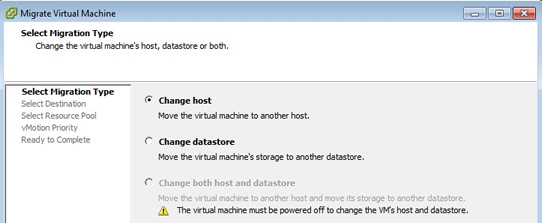

The screen below will appear. As we are only changing the ESXi host, we select "Change host".

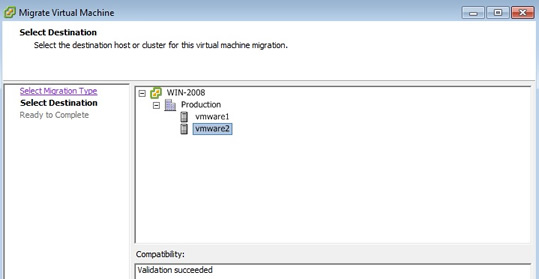

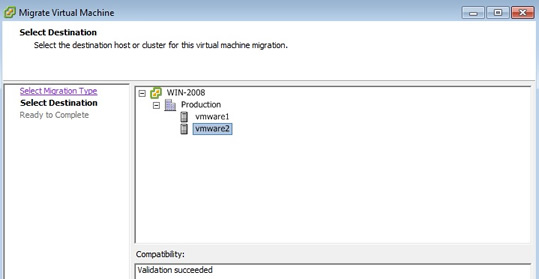

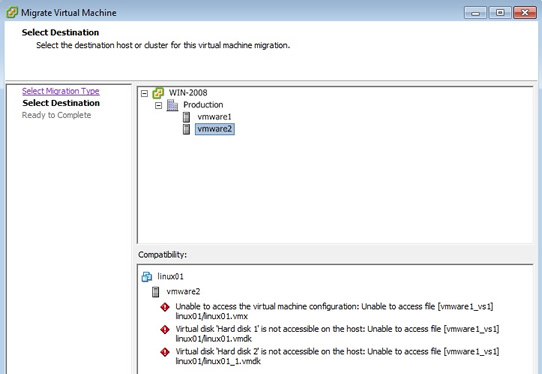

Next we select the destination host, in this case vmware2. If for any reason the VM cannot be migrated, the errors will be shown at the bottom of the screen; otherwise you will see "Validation succeeded".

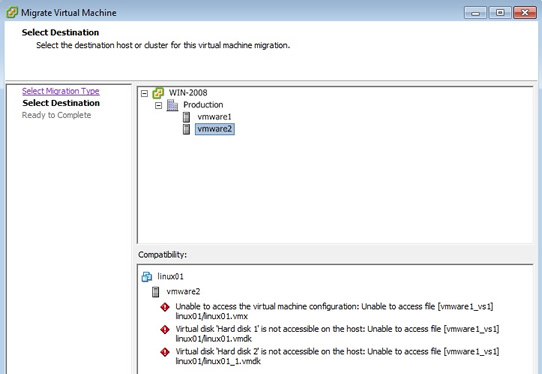

Here is an example of a VM failing vMotion validation. This is because the VM uses local storage, which we will correct in the Storage vMotion section below.

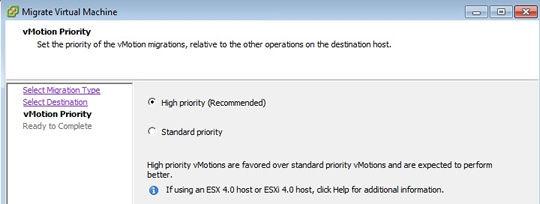

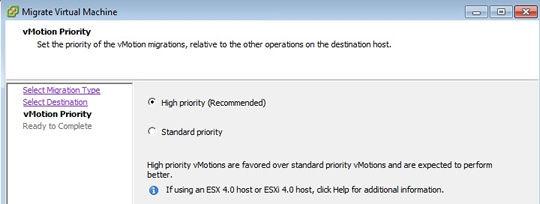

Next comes a priority screen. The priority level you choose depends on the resources available and the time of day (you may want standard priority during business hours).

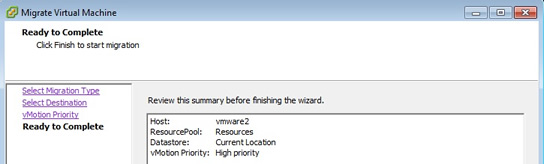

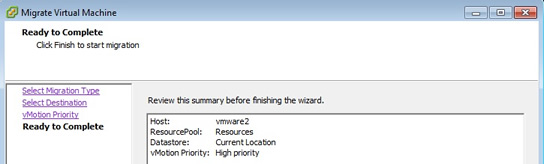

Lastly, a summary screen appears.

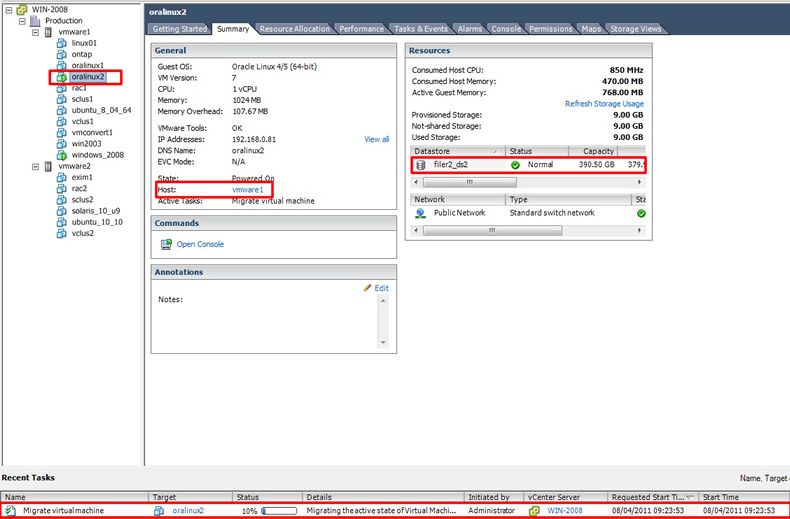

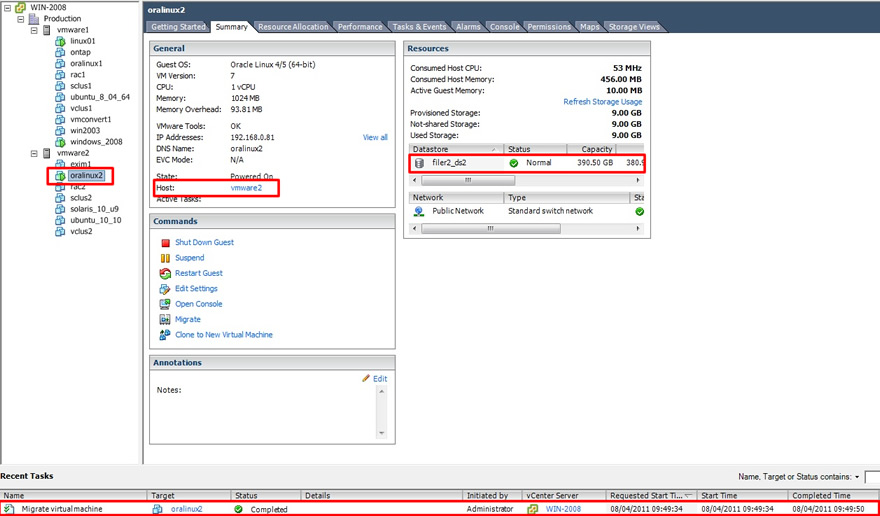

You can see that the VM was running on vmware1 and used the filer2_ds2 datastore. In the Recent Tasks panel at the bottom, you can see it being migrated from this ESXi server.

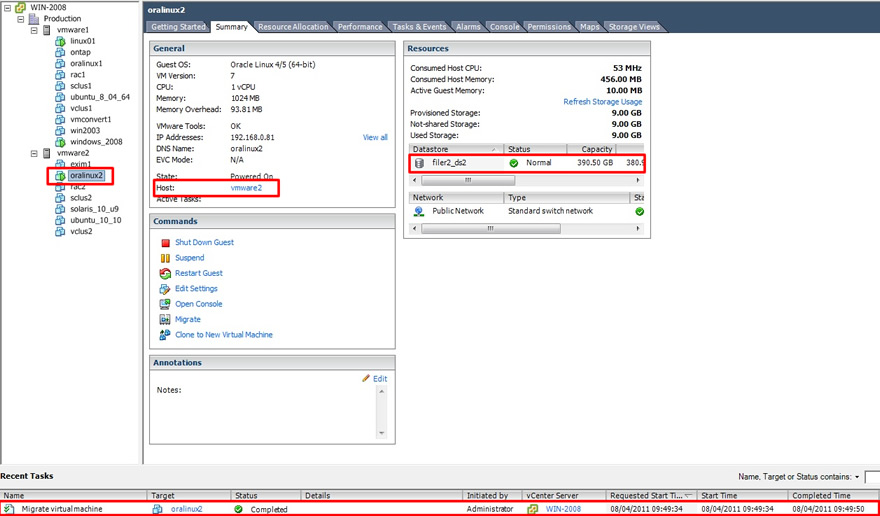

Finally, the VM is successfully migrated. You can clearly see that it is now running on vmware2 and is still using the same datastore (filer2_ds2). The whole process took only 16 seconds; this may of course take longer depending on the VM's memory size and usage.

The other option is to drag and drop the VM from one server to the other, which performs the same task.

If you receive errors like the one below, check your vMotion network: make sure both ESXi servers have different IP addresses and are able to contact each other (use PuTTY to log in and ping each other). To produce this error, I gave both ESXi servers the same vMotion IP address and then tried to migrate a VM; it paused for a while and then threw out this error message.

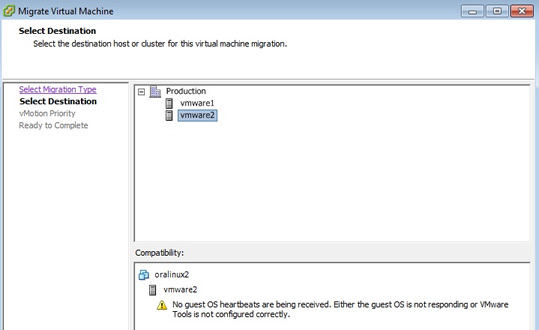

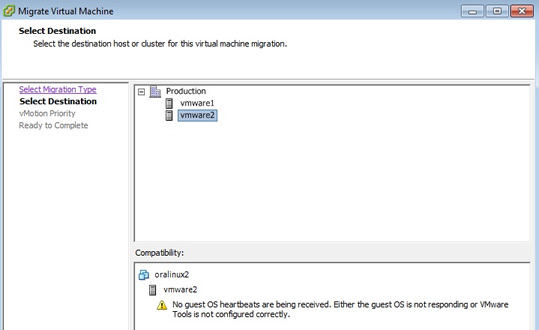

You might also see this error when trying to migrate a VM. It basically means that VMware Tools has not established a heartbeat yet, generally because the VM has just been started or just migrated. Give the VM a little while to settle down and then retry the migration.
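Since the fix for the heartbeat error is simply to wait and retry, a scripted migration might wrap the call in a retry loop. This is a sketch assuming a hypothetical `try_migrate` callable that raises a hypothetical `HeartbeatNotReady` exception; neither is part of any VMware tool, and the 60-second wait is an arbitrary choice.

```python
import time


class HeartbeatNotReady(Exception):
    """Hypothetical error raised when VMware Tools has no heartbeat yet."""


def migrate_with_retry(try_migrate, attempts=5, wait_s=60.0, sleep=time.sleep):
    """Call try_migrate(), waiting wait_s seconds after each heartbeat failure."""
    for attempt in range(1, attempts + 1):
        try:
            return try_migrate()
        except HeartbeatNotReady:
            if attempt == attempts:
                raise  # give up and surface the last error
            sleep(wait_s)  # let the VM settle before trying again
```

Passing `sleep` as a parameter keeps the loop testable: a test can hand in a function that records the waits instead of actually sleeping.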


Storage vMotion

It is possible to move a VM's storage to another storage area without even powering the VM off. This is ideal when you want to migrate onto a new SAN or iSCSI server, making a storage upgrade a breeze. There are a number of other reasons to use Storage vMotion (SVMotion):

- Decommission an old array whose lease or warranty has expired

- Switch from one storage type to another, say from Fibre Channel to iSCSI

- Move VMs or virtual disks out of a LUN that is running out of space

- Ease future upgrades from one version of VMFS to another

- Convert RDM files into virtual disks

As with vMotion, there are a number of requirements:

- Spare memory and CPU cycles: moving storage requires additional memory to carry out the process, and additional CPU cycles will be used

- Free space for snapshots: Storage vMotion is a copy-then-delete process, so make sure there is enough free space available

- Time: it will take longer to copy the files across; the larger the files, the longer it will take

- Concurrency: try not to burden the ESXi server too much by running multiple Storage vMotions at once; run a maximum of 4 at any one time
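The free-space and concurrency requirements translate fairly directly into code. The sketch below assumes a hypothetical `migrate_one` function that performs a single storage move (for example, a wrapper around whatever command you use); it caps the number of copies in flight at the 4 quoted above using a semaphore, and checks that the target datastore can hold every VM in full, since the source files are only deleted after the copy.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

MAX_CONCURRENT_SVMOTIONS = 4  # limit quoted in the text above


def fits_on_target(sizes_gb, target_free_gb, headroom_gb=0.0):
    """Source files are only deleted after the copy, so the target must
    hold the full size of every VM being moved, plus any headroom."""
    return sum(sizes_gb) + headroom_gb <= target_free_gb


def run_storage_migrations(vms, migrate_one, limit=MAX_CONCURRENT_SVMOTIONS):
    """Run migrate_one(vm) for each VM with at most `limit` copies in flight.

    Returns the results (in input order) and the peak concurrency observed.
    """
    slots = threading.BoundedSemaphore(limit)
    lock = threading.Lock()
    state = {"active": 0, "peak": 0}

    def guarded(vm):
        with slots:  # blocks while `limit` migrations are already running
            with lock:
                state["active"] += 1
                state["peak"] = max(state["peak"], state["active"])
            try:
                return migrate_one(vm)
            finally:
                with lock:
                    state["active"] -= 1

    # more worker threads than slots, so the semaphore enforces the limit
    with ThreadPoolExecutor(max_workers=max(1, len(vms))) as pool:
        results = list(pool.map(guarded, vms))
    return results, state["peak"]
```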

If you followed along with creating a VM in my virtual machine section, you will have noticed that I configured it on local storage. I am now going to move it to a shared storage area, which means that this VM can then use vMotion.

VM migration using Storage vMotion

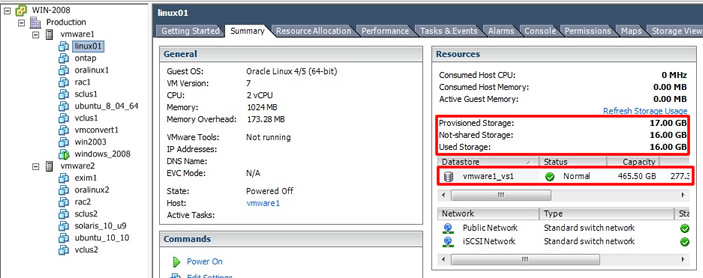

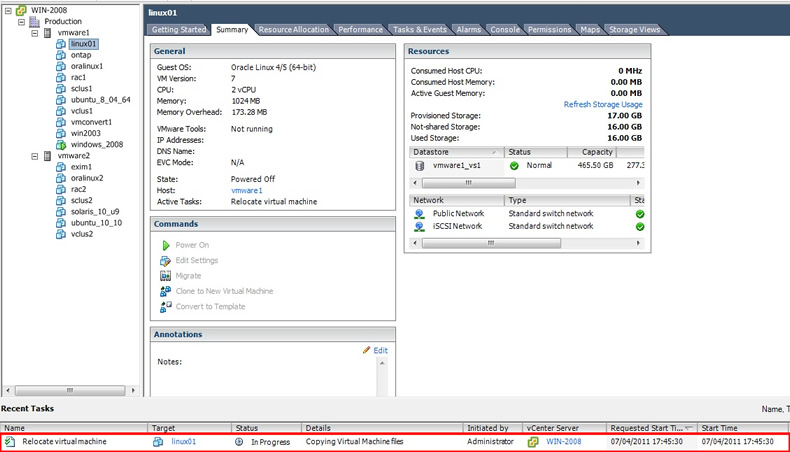

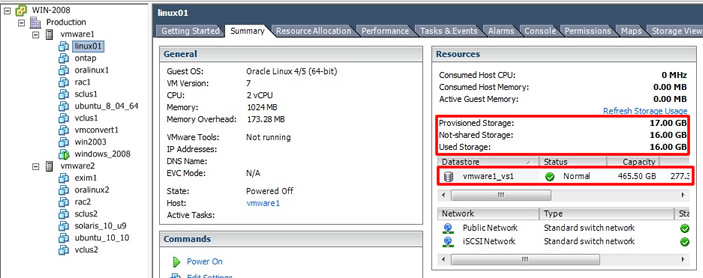

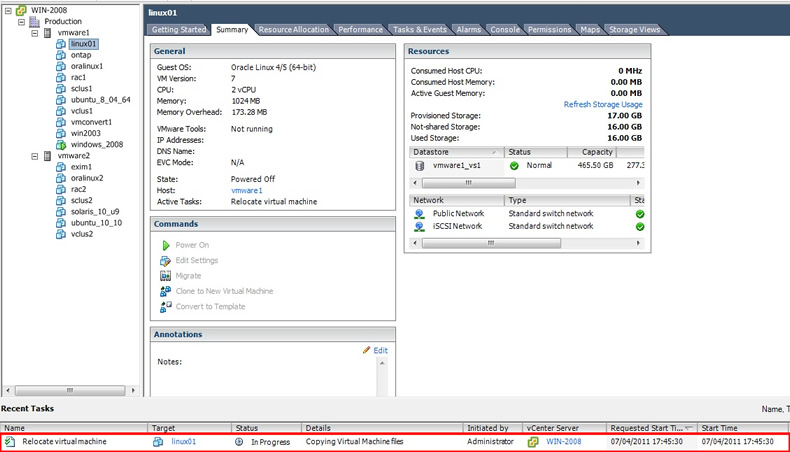

The VM I have is using a local datastore (vmware1_vs1) and has 17GB of storage allocated to it. The VM is powered down, but you can have it running if you wish.

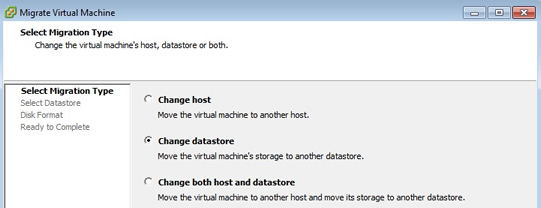

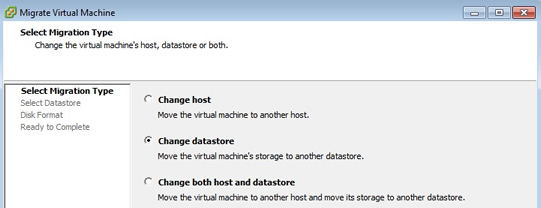

Right-click the VM and select Migrate, which should bring up the screen below; select "Change datastore".

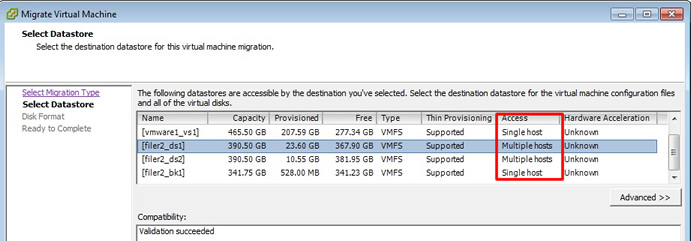

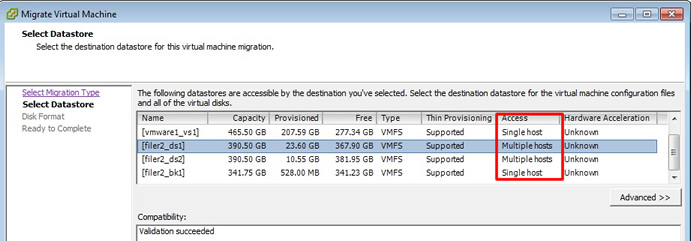

Select the datastore you wish to migrate to. Also notice the Access column, which shows what type of access each datastore has; in this case I am selecting a datastore that has multiple access.

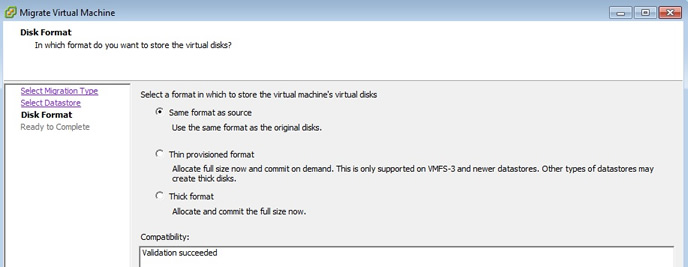

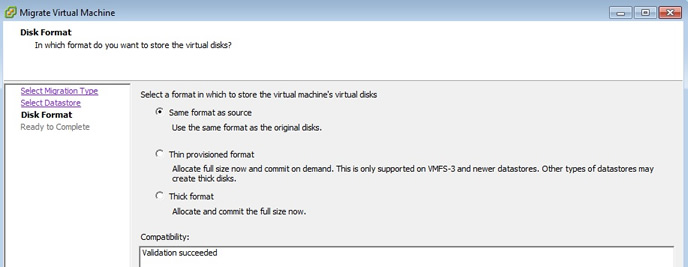

I have already covered disk provisioning in my virtual machine section; here I choose to keep the same format as the source.

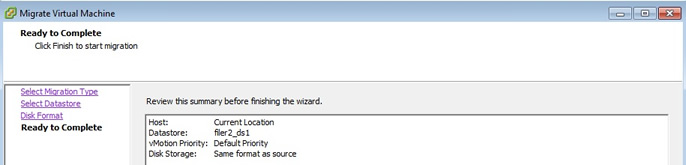

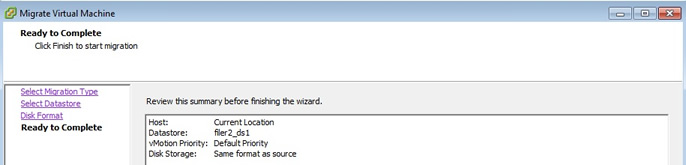

Lastly, the summary screen appears.

You can watch the progress in the "Recent Tasks" panel at the bottom.

When the migration is complete, the datastore will change and a completed entry will appear in the "Recent Tasks" panel. If you look carefully, it took only 1 minute and 22 seconds to migrate a 17GB VM with a few clicks of a button. HOW COOL IS THAT!
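As a rough sanity check, the timing above gives an average copy rate you can use to estimate other moves. This assumes all 17GB was actually copied, that 1 GB is 1024 MB, and that the rate stays constant, which it will not on a busy array or network. Both helpers are illustrative.

```python
def throughput_mb_s(size_gb, minutes, seconds):
    """Average copy rate, treating 1 GB as 1024 MB."""
    return size_gb * 1024 / (minutes * 60 + seconds)


def estimate_seconds(size_gb, rate_mb_s):
    """Naive estimate: assumes the rate stays constant for larger VMs."""
    return size_gb * 1024 / rate_mb_s


rate = throughput_mb_s(17, 1, 22)                  # the 17GB VM: 1 min 22 s
print(round(rate))                                 # 212 (MB/s)
print(round(estimate_seconds(100, rate) / 60, 1))  # 8.0 (minutes for 100GB)
```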

You can use the command line if you like, which is ideal if you want to script several Storage vMotions in one go, for example when you are migrating from one storage array to another. The command is svmotion.pl (part of the vSphere CLI); it has many options, so check them out using its man page.


Cold Migration

If you cannot meet the hot migration requirements, you can always fall back to cold migration, where the VM is powered off. Because it is not as stringent, there are fewer requirements to meet. As long as both ESXi servers have visibility of the same storage, a cold migration can be very quick and the VM downtime kept to a minimum. You can also perform an ESXi server migration and a storage migration at the same time, but it will take a little longer. I personally only hot migrate servers that are not complex (no databases, not clustered); generally web servers, SMTP servers and middleware servers are ideal candidates, but that's up to you.

I am not going to cover cold migration in detail, as I performed one in the Storage vMotion example above; it is the same as a hot migration but with the VM powered off. I personally perform cold migrations on complex VM setups such as MSCS and other cluster setups (Veritas, Sun) that use quorum disks, and also on database VMs, as these generally have large amounts of memory. Don't get me wrong, it is possible to perform a hot migration on these types of setups; it's just my preference to perform a cold migration.
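The rule of thumb above can be written down as a small decision helper. It simply encodes the author's personal preference, not a VMware restriction, and the dictionary keys are hypothetical flags you would set yourself.

```python
def choose_migration(vm):
    """Encode the author's rule of thumb; `vm` is a dict of hypothetical flags."""
    complex_setup = (vm.get("clustered", False)      # MSCS, Veritas, Sun clusters
                     or vm.get("quorum_disk", False)
                     or vm.get("database", False))   # usually large memory
    if complex_setup:
        return "cold"  # personal preference, not a VMware restriction
    if not vm.get("meets_vmotion_requirements", False):
        return "cold"  # hot migration would fail validation anyway
    return "hot"


print(choose_migration({"meets_vmotion_requirements": True}))  # hot
print(choose_migration({"database": True,
                        "meets_vmotion_requirements": True}))  # cold
```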